This introduction maps the emerging landscape where onchain tools and market incentives meet compute and verification. Galaxy Research has described how permissionless blockchain infrastructure enables GPU marketplaces, zkML for verifiable inference, and autonomous agents that are moving from proofs of concept to real deployments.

We survey the stack: compute marketplaces, zkML layers, agent platforms, and smart-contract tooling that together form a practical onchain approach. Expect a focus on real-world signals — live deployments, active communities, and measurable value rather than hype.

Readers in the United States will find a clear lens for buyers and builders: how throughput, heterogeneity tolerance, verification, token design, and compliance shape sustainability. The piece previews work from Bittensor, Gensyn, Render, Modulus, NEAR, Ocean, Fetch.ai and others to show how compute, verification, and incentives determine scale and trust.

Key Takeaways

- Onchain marketplaces can unlock global GPU supply for model work.

- Verifiable inference (zkML) adds auditability for post-training use.

- Token design and governance shape long-term sustainability.

- Practical evaluation favors real deployments and active communities.

- Interplay of compute, verification, and incentives drives reliability.

Why decentralized AI training matters for crypto in the United States

U.S. innovation benefits when open compute markets broaden access to idle GPUs across universities, labs, and startups.

Galaxy Research shows that permissionless GPU marketplaces and zkML verification democratize access to compute. This reduces reliance on a few labs and makes the overall blockchain ecosystem more resilient.

U.S. teams gain faster experimentation cycles through pooled resources, onchain payments, and governance. That lowers cost and increases participation by developers and users in regulated fields.

- Resilience: broader network reduces single points of failure.

- Auditability: verifiable pipelines meet compliance needs.

- Cost flexibility: community compute and token-based pricing diversify spend.

| Benefit | U.S. impact | Example use cases |
|---|---|---|
| Open compute | Faster development, lower lock-in | Healthcare model tuning |
| Verifiable outputs | Audit trails for compliance | Finance and public sector |
| Token incentives | Aligned contributor rewards | Energy management and services |

Understanding model training, post‑training, and inference for large models

Training large models follows a clear loop: forward pass, loss computation, backward pass, and optimizer updates. Each cycle nudges parameters to cut error. Repeat until the model meets validation goals.

From forward/backward passes to optimizer updates

Transformers process token sequences with attention that links context across long spans. The forward pass computes outputs from tokens. Loss measures deviation from targets. Backpropagation sends gradients back through the architecture so optimizers can adjust weights.
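The loop above can be sketched in plain Python on a toy problem. This is a minimal illustration, assuming a one-feature linear model with mean squared error and plain gradient descent in place of a transformer and an adaptive optimizer; the data and the `train` helper are hypothetical.

```python
# Toy data generated from y = 2x + 1 (an illustrative assumption).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def train(steps: int = 2000, lr: float = 0.02) -> tuple[float, float, float]:
    w, b = 0.0, 0.0  # parameters to learn
    n = len(xs)
    loss = float("inf")
    for _ in range(steps):
        # Forward pass: predictions from the current parameters.
        preds = [w * x + b for x in xs]
        # Loss: mean squared error against the targets.
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # Backward pass: analytic gradients of the MSE with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Optimizer update: plain gradient descent.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss
```

Calling `train()` should recover parameters close to `w = 2` and `b = 1`; a real model swaps in autograd and an optimizer such as AdamW, but the four phases stay the same.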

Why RL post‑training scales on heterogeneous, decentralized compute

Post‑training methods like supervised fine‑tuning and reinforcement learning refine behavior after pre‑training on broad corpora. RL excels when many independent rollouts run in parallel.

- Parallel rollouts: Agents run asynchronously on varied GPUs with minimal sync.

- Lower coordination: Fewer all‑reduce steps reduce dependence on high-bandwidth interconnects such as NVLink or InfiniBand.

- Inference load: Serving fixed‑weight large models still needs high memory bandwidth and substantial GPU resources.
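A minimal sketch of asynchronous rollout collection, assuming a thread pool stands in for a heterogeneous GPU fleet. The `rollout` and `collect` helpers, the per-worker delays, and the random placeholder reward are all hypothetical; the point is that results are consumed as each worker finishes rather than in lockstep.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def rollout(worker_id: int, seed: int, delay: float) -> tuple[int, float]:
    """Simulate one episode on a worker; `delay` stands in for a slower GPU."""
    time.sleep(delay)
    rng = random.Random(seed)
    reward = sum(rng.random() for _ in range(10))  # placeholder episode return
    return worker_id, reward


def collect(num_rollouts: int = 8) -> list[tuple[int, float]]:
    # Hypothetical heterogeneous fleet: each worker runs at a different speed.
    delays = [0.01, 0.05, 0.02, 0.08]
    results = []
    with ThreadPoolExecutor(max_workers=len(delays)) as pool:
        futures = [
            pool.submit(rollout, i % len(delays), i, delays[i % len(delays)])
            for i in range(num_rollouts)
        ]
        # Handle each result as soon as it arrives instead of in lockstep.
        for fut in as_completed(futures):
            results.append(fut.result())
    return results
```

A learner would then update the policy from whatever batch of rollouts has arrived, which is why RL post-training tolerates mixed hardware better than tightly synchronized pre-training.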

| Item | Example | Cost or impact |
|---|---|---|
| Training cost | GPT‑4-scale model | >$100M |
| Hardware | NVIDIA H100/B200 | ~$30,000 per GPU |
| Interconnect | NVLink / InfiniBand | Critical for throughput |

Optimizers and communication‑efficient strategies further unlock distributed performance, while verification layers ensure contributions are auditable.

Decentralized vs. distributed training: what crypto adds beyond coordination

Opening model work to permissionless contributors forces new trade-offs in latency, trust, and rewards. Traditional distributed setups coordinate known participants over fast data center interconnects. Permissionless decentralized systems must instead cope with internet links, varied GPUs, and unknown actors.

Crypto-native mechanisms add three practical layers: verifiable contributions, automated rewards, and transparent governance.

- Verification: onchain or offchain proofs (zkML, proof-of-training) help detect and reject poisoned updates.

- Incentives: tokens pay providers and validators, align long-term stewardship, and enable slashing for bad actors.

- Architecture: looser coupling and asynchronous updates favor resilience over tight throughput.

| Challenge | Crypto solution | Impact |
|---|---|---|
| High latency | Bandwidth‑aware optimizers | Lower sync overhead |
| Unverified updates | zk proofs / attestations | Auditability |
| Heterogeneous compute | Tokenized rewards | Broad participation |

Composability is a final advantage: protocols let data markets, agents, and compute stitch into end-to-end services. That shifts ownership from single operators to an open economic stack for model development and deployment.

Key roadblocks and how projects solve them: communication, verification, compute, incentives

Practical adoption depends on solving four interlocking problems: communication overhead, proof of correct work, mixed GPU fleets, and aligned incentives. Each requires engineering and protocol-level fixes to make model development reliable on public links.

Communication and async strategies

Syncing gradients and full parameters over standard internet creates long idle times and wasted compute power. Asynchronous updates and optimizer-aware compression cut wait time and boost throughput.
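One common form of communication-efficient update is top-k sparsification with error feedback: each worker sends only its largest gradient entries and carries the unsent remainder into the next round. The sketch below is a generic illustration of that technique, not the method of any specific project; `topk_compress` is a hypothetical helper.

```python
def topk_compress(
    grad: list[float], residual: list[float], k: int
) -> tuple[dict[int, float], list[float]]:
    """Send only the k largest-magnitude entries; keep the rest as residual."""
    # Error feedback: add back whatever was not sent last round.
    corrected = [g + r for g, r in zip(grad, residual)]
    top = sorted(range(len(corrected)), key=lambda i: abs(corrected[i]), reverse=True)[:k]
    sparse = {i: corrected[i] for i in top}  # the payload that goes over the network
    new_residual = [0.0 if i in sparse else v for i, v in enumerate(corrected)]
    return sparse, new_residual
```

With `k` much smaller than the gradient length, each round transmits a few index/value pairs, and nothing is permanently dropped because small entries accumulate in the residual until they are large enough to send.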

Proofs and verifiable contributions

Cryptographic attestations like zkML and proof-of-training let unknown providers prove correct work without exposing raw data. These reduce fraud risk and create audit trails for model outputs.
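Some proof-of-training designs rest on commitments plus spot checks: the provider publishes a hash of every checkpoint, and a verifier replays randomly chosen steps to confirm them. The toy below illustrates only that idea; it is not zero-knowledge, it is not Gensyn's protocol, and `step`, `provider_run`, and `spot_check` are hypothetical stand-ins.

```python
import hashlib
import json
import random


def step(state: list[float], lr: float = 0.1) -> list[float]:
    """Deterministic stand-in for one training step (shrinks each weight)."""
    return [round(v - lr * v, 10) for v in state]


def digest(state: list[float]) -> str:
    """Hash commitment to a checkpoint."""
    return hashlib.sha256(json.dumps(state).encode()).hexdigest()


def provider_run(init: list[float], n: int, cheat_at: int = -1):
    """Run n steps, committing to every checkpoint; optionally tamper at one step."""
    states, commits = [init], [digest(init)]
    for i in range(n):
        nxt = step(states[-1])
        if i == cheat_at:
            nxt = [v + 1.0 for v in nxt]  # simulated poisoned update
        states.append(nxt)
        commits.append(digest(nxt))
    return states, commits


def spot_check(states, commits, samples: int, seed: int = 0) -> bool:
    """Replay `samples` randomly chosen steps and compare against the commitments."""
    rng = random.Random(seed)
    for i in rng.sample(range(len(commits) - 1), samples):
        if digest(states[i]) != commits[i]:
            return False
        if digest(step(states[i])) != commits[i + 1]:
            return False
    return True
```

Checking fewer steps lowers verification cost, but a single tampered step is caught only with probability `samples / n`, which is why such schemes usually pair spot checks with slashing.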

Heterogeneous GPU coordination

Geographic variance and mixed GPU types cause stragglers. Robust optimizers, adaptive scheduling, and checkpointing tolerate delays and faults while keeping convergence stable.
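Adaptive scheduling can be as simple as splitting each global batch in proportion to measured throughput, so slower GPUs get less work instead of holding everyone back. A minimal sketch, assuming throughput has already been measured in samples per second; `allocate` and the GPU labels are hypothetical.

```python
def allocate(batch: int, throughput: dict[str, float]) -> dict[str, int]:
    """Split a global batch across workers in proportion to measured samples/sec."""
    total = sum(throughput.values())
    shares = {w: batch * t / total for w, t in throughput.items()}
    alloc = {w: int(s) for w, s in shares.items()}
    # Hand leftover samples to the workers with the largest fractional remainders.
    leftover = batch - sum(alloc.values())
    for w in sorted(shares, key=lambda w: shares[w] - alloc[w], reverse=True)[:leftover]:
        alloc[w] += 1
    return alloc
```

For example, `allocate(100, {"h100": 300, "a100": 150, "rtx4090": 50})` assigns 60, 30, and 10 samples, so all three workers should finish a step at roughly the same time.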

Incentives, ownership, and monetization

Tokenized rewards can pay timely, accurate updates and penalize bad actors via slashing conditions. Teams must balance open model weights with pay-for-use paths so providers and designers earn lasting revenue.
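The reward-and-slash logic can be sketched as a small settlement function. This assumes a fixed per-update reward and a proportional slash on stake; the `Provider` record, `settle`, and both parameters are hypothetical, and real protocols enforce this in contracts rather than in Python.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    stake: float
    balance: float = 0.0


def settle(
    providers: dict[str, Provider],
    verified: dict[str, bool],
    reward: float,
    slash_rate: float,
) -> None:
    """Pay providers whose updates verified; slash a fraction of stake otherwise."""
    for name, ok in verified.items():
        p = providers[name]
        if ok:
            p.balance += reward
        else:
            p.stake -= p.stake * slash_rate
```

The design choice worth checking in any real protocol is the ratio between `reward` and the expected slash: cheating should cost more in lost stake than it could earn.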

| Roadblock | Solution | Impact |
|---|---|---|
| High latency | Async updates + compression | Higher throughput |
| Unverified work | zk proofs / attestations | Auditability |
| Heterogeneous GPUs | Adaptive schedulers | Reliability |

Ongoing needs: better compression, adaptive scheduling, and standard verification APIs across services remain priorities for U.S. teams building resilient protocol stacks.

Market snapshot: AI crypto tokens and networks shaping 2025

By mid‑2025, a narrow group of tokens set the tempo for investor appetite and operational liquidity across blockchain infrastructure.

Capitalization trends and volatility across TAO, FET, RNDR, NEAR, OCEAN

Market size: AI‑focused crypto tokens held roughly $24B–$27B in total cap by mid‑2025, with sharp swings after the 2024 peaks.

Leaders include TAO (Bittensor), FET (Fetch.ai), RNDR (Render), NEAR, and OCEAN. TAO traded near $322 with a ~$2.9B market cap, NEAR hovered near $2.68 with a ~$3.32B cap, and RNDR posted daily moves near 19% during rallies.

Signals to watch: active communities, real‑world use cases, and listings

What matters most: real deployments, developer traction, exchange liquidity, and clear roadmaps tied to services and infrastructure delivery.

- Correlation: rallies in core leaders often lift the broader token basket.

- Economics: demand for compute and data from large models supports token utility and incentives.

- U.S. access: strong listings and custody options improve user participation and regulatory clarity.

| Token | Focus | Signal |
|---|---|---|
| TAO | decentralized training | protocol activity |
| RNDR | GPU rendering | usage spikes |
| OCEAN | data markets | dataset liquidity |

Product Roundup: decentralized AI training networks and crypto projects

This roundup contrasts incentive-led, optimizer-driven, and speed-first approaches that power large model work.

Bittensor (TAO): proof‑of‑intelligence subnets

Bittensor runs permissionless subnets where contributors are ranked by output quality and rewarded in TAO. The system supports more than 118 specialized subnets and a fast-growing community.

Collective intelligence emerges as providers earn tokens when their model outputs score well in peer evaluations. TAO traded near $322 with about $2.9B market cap in mid‑2025.
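A simplified sketch of score-based rewards: validators score miners, scores are averaged with stake weights, and an epoch's emission is split proportionally. This deliberately omits the actual Yuma Consensus mechanism Bittensor uses; `miner_emissions` and its inputs are hypothetical.

```python
def miner_emissions(
    scores: dict[str, dict[str, float]],
    stake: dict[str, float],
    emission: float,
) -> dict[str, float]:
    """Split one emission across miners by stake-weighted validator scores.

    scores[validator][miner] is that validator's quality score for a miner.
    """
    total_stake = sum(stake.values())
    miners = {m for per_validator in scores.values() for m in per_validator}
    # Stake-weighted average score for each miner.
    agg = {
        m: sum(stake[v] * scores[v].get(m, 0.0) for v in scores) / total_stake
        for m in miners
    }
    norm = sum(agg.values())
    return {m: emission * s / norm for m, s in agg.items()}
```

Weighting by stake means a validator with more at risk has more say, which is the basic lever these networks use to make honest evaluation the profitable strategy.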

Nous Research: DeMo, DisTrO, and Psyche

Nous Research focuses on optimizer and coordination gains. DeMo (Decoupled Momentum Optimization) cuts sync payloads by 10x–1000x using momentum decoupling and DCT compression.

DisTrO adds fault tolerance and load balancing and proved its value on a 15B‑parameter LLaMA‑style model run. Psyche layers asynchronous updates and ~3x payload compression with optional onchain accounting.

Qubic: speed‑first on‑chain compute

Qubic markets an ultra-fast, chain-aware compute stack for high-throughput ML deployment. It surpassed $1B market cap and draws attention for performance-first architecture.

| Approach | Strength | Signal to Watch |
|---|---|---|
| Bittensor | Incentives + evaluation | Subnet growth |
| Nous Research | Comm-efficient optimizers | Psyche deployments |
| Qubic | Chain-level performance | Benchmark results |

Why it matters: these options reduce sync costs, raise throughput on mixed hardware, and broaden access to training and model services for U.S. development teams and providers.

Optimizer innovation spotlight: DeMo and DisTrO from Nous Research

Nous Research introduced optimizer techniques that cut bandwidth and raise real-world throughput for mixed hardware clusters.

DeMo splits momentum into local and shared components. It shares only high-impact momentum signals and compresses the rest. This approach, built on AdamW, reduces communication by roughly 10x–1000x.

Decoupled momentum and compression

DCT-like compression removes low-energy coefficients so payloads shrink while preserving convergence signals. That keeps models moving forward with far less bandwidth.
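A simplified sketch of the DCT step on a single 1-D momentum chunk, assuming NumPy is available: transform with an orthonormal DCT-II, keep the `keep` largest-magnitude coefficients, and invert on the receiving side. The chunk size, `keep`, and the helper names are assumptions; this is not Nous Research's implementation.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis; row k is the k-th cosine frequency."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.cos(np.pi * (2 * i + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    m[0] /= np.sqrt(2.0)  # DC row scaling makes the basis orthonormal
    return m


def compress(chunk: np.ndarray, keep: int) -> tuple[np.ndarray, np.ndarray]:
    """Keep only the `keep` highest-energy DCT coefficients of a momentum chunk."""
    coeffs = dct_matrix(len(chunk)) @ chunk
    idx = np.argsort(np.abs(coeffs))[-keep:]
    return idx, coeffs[idx]  # this pair is what would be transmitted


def decompress(idx: np.ndarray, vals: np.ndarray, n: int) -> np.ndarray:
    coeffs = np.zeros(n)
    coeffs[idx] = vals
    return dct_matrix(n).T @ coeffs  # orthonormal, so the inverse is the transpose
```

Because the basis is orthonormal, reconstruction is a single matrix-vector product, and a smooth signal concentrates its energy in a few coefficients, so sending `(idx, vals)` costs far less than sending the full chunk.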

Asynchronous updates and fault tolerance

DisTrO wraps DeMo into an optimizer framework that adds adaptive sync, load balancing, and fault tolerance. A December 2024 demo trained a 15B-parameter LLaMA-style model over ordinary internet links, validating feasibility.

- Psyche enables GPUs to send updates asynchronously while proceeding to the next step, boosting utilization.

- Models using RL post-training gain more from async rollouts and sparser sync.

- Verification hooks combine optimizer telemetry with cryptographic attestations to improve trust in open participation.

| Feature | Benefit | Real-world signal |
|---|---|---|
| Decoupled momentum | Less bandwidth | 10x–1000x reduction |
| DCT compression | Smaller payloads | Preserved convergence |
| Adaptive sync (DisTrO) | Stability on mixed hardware | 15B model demo (Dec 2024) |
| Psyche async | Higher GPU utilization | Additional ~3x compression |

Practical wins include lower operational costs, easier scaling across heterogeneous infrastructure, and readiness for production-grade network participation. Teams should also review broader analysis in the convergence report for context on protocol-level trade-offs.

Decentralized compute marketplaces: GPU access for training and inference

Global marketplaces now pool spare GPU capacity so smaller teams can run large model workflows without heavy cloud bills. These platforms match idle hardware with machine learning jobs and add layers for verification, payments, and orchestration.

Gensyn: proof‑of‑training and trustless job execution

Gensyn connects ML engineers to idle GPUs via a blockchain-based marketplace. It uses cryptographic proof‑of‑training so work can be verified when outsourced to untrusted providers.
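The escrow-based payment flow can be sketched as a tiny state machine: funds are locked when a job is posted and released only after verification. This is a generic illustration under assumed states and a boolean verification result; `Escrow` and `JobState` are hypothetical and not Gensyn's contracts.

```python
from enum import Enum


class JobState(Enum):
    FUNDED = "funded"
    SUBMITTED = "submitted"
    PAID = "paid"
    REFUNDED = "refunded"


class Escrow:
    """Hold a buyer's payment until the provider's work passes verification."""

    def __init__(self, price: float):
        self.price = price
        self.state = JobState.FUNDED

    def submit(self) -> None:
        if self.state is not JobState.FUNDED:
            raise RuntimeError("job not open for submission")
        self.state = JobState.SUBMITTED

    def resolve(self, proof_ok: bool) -> tuple[str, float]:
        """Release funds to the provider if verified, else refund the buyer."""
        if self.state is not JobState.SUBMITTED:
            raise RuntimeError("nothing to resolve")
        self.state = JobState.PAID if proof_ok else JobState.REFUNDED
        return ("provider" if proof_ok else "buyer", self.price)
```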

Render (RNDR): GPU rendering and generative workflows

Render rents idle GPU compute for graphics and generative tasks. It supports media, simulation, and some model workflows, and RNDR has shown strong but volatile market signals, including daily moves near 19% during rallies.

Akash Network: open cloud for AI workloads

Akash provides an open marketplace for cloud and AI workloads. It offers an alternative to centralized infrastructure and complements pipelines for both training and inference.

| Platform | Strength | Pricing |
|---|---|---|
| Gensyn | Verifiable jobs | Market rates, escrow |
| Render (RNDR) | Graphics and generative | Spot pricing, usage-based |
| Akash | Cloud workloads | Lower-cost alternatives |

How teams benefit: lower barriers for development, on-demand inference capacity for latency-sensitive apps, and new monetization paths for providers through tokens and service agreements.

Verifiable AI and zkML: running and checking models onchain

Zero‑knowledge proofs now let offchain models prove their results to onchain systems without exposing inputs or weights. This makes verifiable inference practical for smart contracts and oracles that require high integrity from external compute.

Modulus Labs builds circuits that prove correct model outputs while protecting sensitive data. Their approach ties succinct proofs to model execution, so a smart contract can accept a claim only after onchain verification. That raises auditability and user trust.

- Define zkML: proofs that verify inference without revealing inputs or weights.

- Workflow: offchain run → proof generation → onchain verification → contract state update.

- Privacy: keeps raw data private while providing provenance for outputs.
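The four-step workflow can be sketched as contract-side gating logic. Everything here is a stand-in: `mock_prove` only hashes the model commitment, input hash, and output together, so anyone can produce it and nothing is zero-knowledge. The sketch shows where a real zkML verifier would plug in (the `verify` callable), not how one works; `ToyContract` and the helpers are hypothetical.

```python
import hashlib
from typing import Callable


def commit(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def mock_prove(model_commitment: str, input_hash: str, output: float) -> bytes:
    # Placeholder "proof": a hash binding model, input, and output together.
    return hashlib.sha256(f"{model_commitment}|{input_hash}|{output!r}".encode()).digest()


def mock_verify(model_commitment: str, input_hash: str, output: float, proof: bytes) -> bool:
    return mock_prove(model_commitment, input_hash, output) == proof


class ToyContract:
    """Applies a model output only after the verifier accepts its proof."""

    def __init__(self, model_commitment: str,
                 verify: Callable[[str, str, float, bytes], bool]):
        self.model_commitment = model_commitment
        self.verify = verify
        self.state: dict[str, float] = {}

    def submit(self, key: str, input_hash: str, output: float, proof: bytes) -> bool:
        if not self.verify(self.model_commitment, input_hash, output, proof):
            return False  # rejected: contract state is unchanged
        self.state[key] = output
        return True
```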

Use cases include DeFi trading signals, prediction markets, and autonomous onchain agents that need deterministic checks before contracts change state. Performance gains come from batching, aggregated proofs, and specialized circuits that cut proof costs.
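The batching gain is simple amortization: one aggregated proof verification is shared across every inference in the batch. A sketch with hypothetical gas figures (not measurements):

```python
def per_inference_cost(verify_cost: float, per_item_cost: float, batch: int) -> float:
    """Amortized onchain cost when one aggregated proof covers `batch` inferences."""
    return verify_cost / batch + per_item_cost
```

With an assumed 300,000 gas verification and 5,000 gas of per-item overhead, a batch of 100 drops the per-inference cost from 305,000 to 8,000 gas; the per-item term becomes the floor once batches grow large.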

| Feature | Benefit | Impact |
|---|---|---|
| zk proofs | Trustless verification | Contracts accept outputs without raw data |
| Proof batching | Lower gas and latency | Scales inference for many users |
| Interchain format | Cross‑chain compatibility | Protocol portability for services |

Roadmap needs: common proof formats, developer tooling, and standards so protocols and smart contracts can integrate verifiable outputs across chains and workflows.

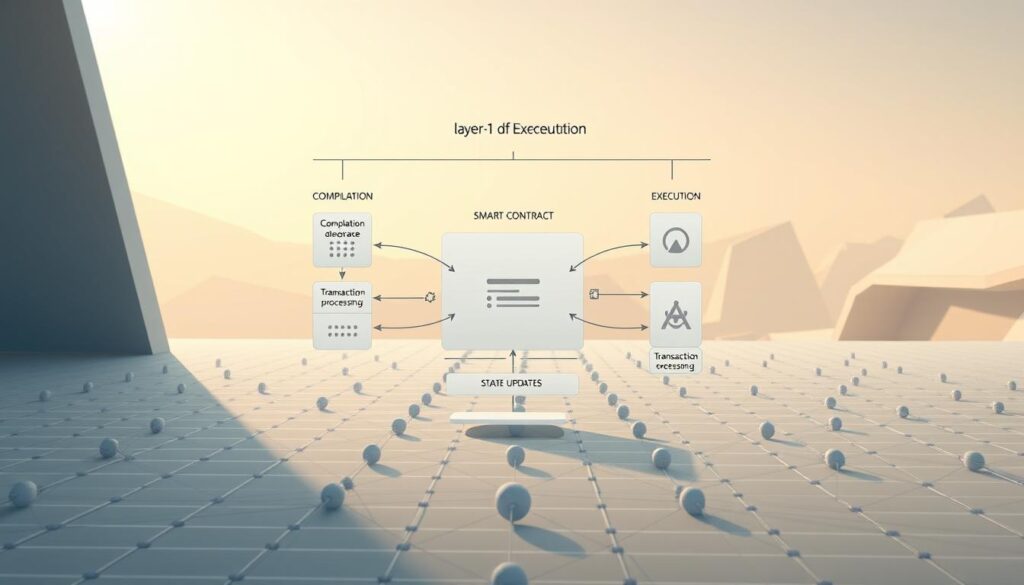

AI‑ready smart contracts and Layer‑1 infrastructure

Layer‑1 platforms now embed model logic so contracts can act on inference without long waits. This trend pushes compute and verification closer to onchain flows and makes contract-triggered decisions practical for real apps.

Cortex: onchain model execution

Cortex runs model execution inside a specialized virtual machine so smart contracts can call inference directly. That design lets contracts use model outputs as immediate inputs for autonomous logic and conditional payouts.

Oraichain: oracle services and verifiable data

Oraichain combines oracle delivery with validation. Instead of only relaying raw data, it verifies model-generated results onchain so contracts accept vetted signals from offchain runs.
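A generic quorum rule illustrates how an oracle layer can accept only vetted results: a value is accepted when at least a threshold share of validators report it. This is an assumed design for illustration, not Oraichain's actual validation mechanism; `accept_result` and the 2/3 threshold are hypothetical.

```python
from collections import Counter
from typing import Optional


def accept_result(reports: dict[str, str], total_validators: int,
                  quorum: float = 2 / 3) -> Optional[str]:
    """Return the value reported by at least `quorum` of validators, else None."""
    if not reports:
        return None
    value, votes = Counter(reports.values()).most_common(1)[0]
    return value if votes / total_validators >= quorum else None
```

Dividing by `total_validators` rather than by the number of reports means silent validators count against acceptance, which trades some availability for safety.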

NEAR Protocol: low‑fee tooling for developers

NEAR offers developer tooling like Near Tasks for labeling and validation, plus sub‑cent fees that make frequent contract interactions affordable. NEAR’s ecosystem supports data workflows that feed verifiable outputs into contracts.

Architectural trade-off: full onchain inference simplifies trust but raises cost and latency. Many builders prefer offchain runs plus proofs or validated oracles to balance gas and performance.

- Typical flow: training offchain → proofs/oracles verify outputs → contracts trigger inference or updates.

- Result: more composable protocols and cheaper user interactions for production dApps.

| Platform | Primary value | Trade‑off |
|---|---|---|
| Cortex | Direct onchain inference | Higher gas; no oracle round trip |
| Oraichain | Verified model outputs | Relies on oracle availability |
| NEAR | Low fees, dev tools | Offchain tooling required |

Data is the new fuel: decentralized data marketplaces for training

Data pipelines have become strategic infrastructure for model development and deployment. Quality, diversity, and governance shape downstream model utility.

Ocean Protocol: tokenized datasets, compute‑to‑data, and staking

Ocean Protocol tokenizes datasets so publishers monetize information as tradable digital assets. Its compute‑to‑data model lets algorithms run where the records live. That preserves raw data and improves compliance for sensitive sectors.
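Compute-to-data can be sketched as a host that runs only publisher-approved algorithms and returns only aggregates. The `DataHost` class, the allowlist, and the scalar-only rule are hypothetical simplifications, not Ocean Protocol's API.

```python
from typing import Callable


class DataHost:
    """Holds private records; only approved algorithms run, and only aggregates leave."""

    def __init__(self, records: list[dict], approved: set[str]):
        self._records = records  # never returned directly
        self._approved = approved

    def run(self, name: str, algo: Callable[[list[dict]], float]) -> float:
        if name not in self._approved:
            raise PermissionError(f"algorithm {name!r} not approved by publisher")
        result = algo(self._records)
        if not isinstance(result, (int, float)):
            raise ValueError("only scalar aggregates may leave the host")
        return float(result)
```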

Designing data pipelines for privacy and monetization

Well‑designed pipelines treat datasets as strategic assets. Curated supply, provenance checks, and governance over what gets listed determine whether a model training run succeeds.

- Compute‑to‑data: train without moving raw files, improving data privacy and auditability.

- Tokenization: access tokens, staking, and curation align incentives for publishers, providers, and users.

- Verification: proofs and audits validate lineage and suitability for machine learning tasks.

Developer workflows tie discovery, access negotiation, and job orchestration into one flow. Tools let engineers find datasets, set terms, and run model training with on‑site compute and protocol primitives.

| Feature | Benefit | U.S. Consideration |
|---|---|---|
| Tokenized access | Monetization for publishers | Supports compliant revenue models |
| Compute‑to‑data | Protects sensitive records | Helps meet privacy laws |
| Staking & curation | Market health and quality | Aligns incentives for trustworthy providers |

Bottom line: decentralized data markets broaden access to valuable datasets while keeping privacy intact. For U.S. teams, clear governance and provenance are essential to scale model training responsibly.

Autonomous agents and composable services across crypto

Composable agents act as persistent services that negotiate, execute tasks, and interact with smart contracts. They let developers stitch data, verification, and compute into workflows that run with minimal oversight.

Fetch.ai: agent-based automation for DeFi, energy, and services

Fetch.ai deploys autonomous agents for trading, energy optimization, and marketplace tasks. Its protocol incentives encourage real‑time actions across mobility and DeFi, and the platform is central to the ASI initiative.

Virtuals Protocol: tokenized agents and creator ecosystems

Virtuals Protocol lets users create, own, and monetize tokenized agents. By late 2024 its ecosystem sat near a $1.6–1.8B market cap and remained active into mid‑2025 with daily agent launches and strong community engagement.

How they compose: agents plug into data feeds, verifiable compute, and model endpoints to form modular services. This mirrors collective intelligence by combining many specialized agents into larger workflows.

- Common tasks: market monitoring, resource optimization, and service coordination with onchain accountability.

- Developer tools: SDKs and templates speed deployment and lower barriers for users and development teams.

- Operational notes: inference latency and external compute reliability are key constraints for mission‑critical tasks.

| Capability | Example | Benefit |
|---|---|---|
| Autonomy | Trading bots | Persistent strategy execution |
| Tokenization | Monetized agents | Creator revenue streams |
| Integration | Data + verification | Composable services |

Project briefs beyond the core stack: infrastructure and applications

New offerings combine web hosting, commodity GPU access, and persistent digital characters to create fresh paths for developers and users.

OpSec Network: modular cloud, GPU hosting, and web services

OpSec Network bundles web hosting, CloudVerse domains, router devices, and GPU hosting into one modular stack for providers and users.

The stack includes RDP-style access, AI-assisted ops, and an AI-driven consensus layer that aims to boost uptime and performance for model runs and application hosting.

Hardware onboarding supports both commodity and enterprise GPU hardware, with routing and security controls to manage remote nodes and reduce attack surface.

Artificial Liquid Intelligence (ALI): iNFTs and AI‑native digital assets

ALI issues interoperable iNFTs that combine generative content with blockchain-verified ownership. The ALI token powers creation, royalties, and secondary markets.

iNFTs enable persistent characters for virtual assistants, gaming avatars, and interactive guides that carry state across dApps.

Smart contracts can automate access rights, royalties, and cross‑platform interoperability to support developer business models and ongoing user engagement.

How these fit the stack: OpSec and ALI extend core compute and verification by adding hosting services, hardware reach, and fresh digital assets that increase user-facing value.

| Offering | Primary value | Key users |
|---|---|---|
| OpSec Network | Modular cloud + GPU hosting, RDP access | Developers, providers, edge hosts |
| CloudVerse | Web hosting + router devices | SMBs, dApp operators |
| ALI iNFTs | Dynamic digital assets with tokenized royalties | Creators, game studios, users |

- Applications: virtual assistants, interactive guides, gaming avatars, and cross‑dApp experiences with persistent identity.

- Market signals: developer adoption, tooling maturity, and steady user traction across verticals will determine long‑term uptake.

Evaluation criteria for buyers and builders: compute, models, data, verification

Effective procurement starts with measurable benchmarks for throughput, latency, and verification rigor.

Throughput, latency, and heterogeneity tolerance

Measure samples per second, end‑to‑end latency, and effective utilization across varied GPU types. Run pilot jobs that mirror expected model sizes and batch patterns.

Key tests include stress runs under poor link conditions and mixed hardware to surface stragglers and scheduler behavior.
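The throughput and utilization metrics can be computed directly from pilot logs. A minimal sketch, assuming each worker reports samples processed, wall-clock time, and busy (compute) time; `PilotLog` and `summarize` are hypothetical. A low minimum utilization is the usual sign of stragglers or scheduler stalls.

```python
from dataclasses import dataclass


@dataclass
class PilotLog:
    gpu: str
    samples: int          # samples processed during the pilot
    wall_seconds: float   # elapsed time for this worker
    busy_seconds: float   # time spent computing, excluding waits


def summarize(logs: list[PilotLog]) -> dict:
    # The job finishes when the slowest worker does.
    wall = max(log.wall_seconds for log in logs)
    util = {log.gpu: log.busy_seconds / log.wall_seconds for log in logs}
    return {
        "samples_per_sec": sum(log.samples for log in logs) / wall,
        "utilization": util,
        "min_utilization": min(util.values()),
    }
```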

Token design, incentives, and governance for sustainability

Review emission schedules, reward splits for providers and model designers, and governance motions that enable upgrades without central control. Check dispute and slashing mechanics embedded in contracts.

- Synchronization strategy: async vs sync and optimizer gains (e.g., momentum decoupling).

- Verification: zkML, proof-of-training, audits, and onchain dispute paths.

- Data pipelines: provenance, access controls, compute-to-data, and curation incentives.

- Developer experience: SDK maturity, docs, and marketplace integration.
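Emission schedules and reward splits are easy to model before committing to a network. A sketch assuming a simple halving schedule and a three-way split among providers, model designers, and validators; the functions, parameters, and shares are hypothetical, not any specific token's design.

```python
def emission_at(epoch: int, initial: float, halving_every: int) -> float:
    """Per-epoch emission under a simple halving schedule (an assumed design)."""
    return initial / (2 ** (epoch // halving_every))


def split(emission: float, provider_share: float, designer_share: float) -> dict[str, float]:
    """Divide one epoch's emission; whatever remains goes to validators."""
    validator_share = 1.0 - provider_share - designer_share
    if validator_share < 0:
        raise ValueError("shares exceed 100%")
    return {
        "providers": emission * provider_share,
        "designers": emission * designer_share,
        "validators": emission * validator_share,
    }
```

Running the schedule forward shows when provider rewards fall below operating costs, which is the point at which fee revenue has to take over for the network to stay sustainable.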

| Criteria | Metric | Why it matters |
|---|---|---|
| Throughput | Samples/sec | Predicts cost and time to train models |
| Heterogeneity | Utilization % across GPUs | Shows resilience to mixed hardware |
| Verification | Proof type & audit logs | Ensures work integrity for contracts |
| Economics | Emission & reward split | Signals long‑term provider incentives |

Security, privacy, and compliance for U.S. teams

Practical deployment in regulated U.S. markets starts with clear controls over who can query models and how results are verified.

Data privacy, provenance, and auditable AI outputs

Handle sensitive datasets carefully. Use compute‑to‑data and encrypted pipelines so raw records never leave approved environments. That reduces exposure during both model runs and inference.

Provenance matters. Track dataset lineage, preprocessing steps, and model changes in immutable logs. This makes audits and regulatory responses faster and more reliable.
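One way to make lineage logs tamper-evident is a hash chain, where each entry's hash covers the previous one; anchoring the latest hash onchain would then commit to the whole history. A minimal sketch with hypothetical `append_entry` and `verify_chain` helpers and illustrative event fields:

```python
import hashlib
import json

GENESIS = "0" * 64


def _entry_hash(prev: str, event: dict) -> str:
    body = json.dumps({"prev": prev, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event whose hash covers the previous entry, forming a chain."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "event": event, "hash": _entry_hash(prev, event)})


def verify_chain(log: list[dict]) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```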

Verification builds trust. zk proofs and onchain attestations help confirm that a model produced a claimed output without revealing private inputs. That lowers tamper risk and supports contract‑level decisions.

- Embed access policies in smart contracts and protocol rules to gate who can call models and when.

- Vetting providers, using secure enclaves, and continuous contributor monitoring reduce operational risk for open participation.

- Document evaluation metrics and change history so auditors and stakeholders can trace model behavior.
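Access rules of the kind a gating contract might enforce (who may call, and how often) can be prototyped offchain first. A minimal sketch assuming an allowlist of caller addresses and a sliding-window rate limit; `AccessPolicy` and its parameters are hypothetical.

```python
import time
from typing import Optional


class AccessPolicy:
    """Allowlist plus a per-caller sliding-window rate limit."""

    def __init__(self, allowed: set[str], max_calls: int, window_s: float):
        self.allowed = allowed
        self.max_calls = max_calls
        self.window_s = window_s
        self.calls: dict[str, list[float]] = {}

    def check(self, caller: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        if caller not in self.allowed:
            return False
        # Keep only calls that fall inside the current window.
        recent = [t for t in self.calls.get(caller, []) if now - t < self.window_s]
        if len(recent) >= self.max_calls:
            self.calls[caller] = recent
            return False
        recent.append(now)
        self.calls[caller] = recent
        return True
```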

| Risk | Mitigation | U.S. relevance |
|---|---|---|
| Data leakage | Compute‑to‑data, encryption | Supports HIPAA, CCPA compliance |
| Tampering | zk proofs, onchain attestations | Audit trails for regulators and users |
| Unauthorized queries | Smart contract access controls | Policy enforcement via contracts |

Clear user communication is essential. Explain model limits, provide dispute paths, and publish change logs. With careful use of crypto primitives, U.S. teams can meet compliance while keeping the benefits of open infrastructure and service composition.

Use cases to prioritize: finance, healthcare, supply chain, and agents

Pick pilots where verification, privacy, and cost map cleanly to business risk. Start with workloads that need audit trails and controlled data flows. Galaxy Research notes that RL post-training fits well with asynchronous, mixed‑provider compute.

Matching workloads to compute and verification needs

Finance: risk models, surveillance, and trading agents benefit from verifiable inference and auditable pipelines. These use cases demand low tamper risk and clear logs for regulators.

Healthcare: clinical NLP and imaging triage pair well with compute‑to‑data and privacy-preserving approaches. Keep patient records on approved hosts and use proofs for model outputs.

Supply chain: optimization benefits from shared data, provenance, and near‑real‑time inference. Track lineage and use lightweight proofs to validate decisions across partners.

- Agents: automation handles routine ops and contract coordination with minimal human oversight.

- Match latency, throughput, and data sensitivity to the right compute and verification stack.

- Start with RL post‑training or fine‑tuning tasks that tolerate async updates and heterogeneous providers.

- Choose providers based on reliability history, proof mechanisms, and SLAs; run small pilots to validate accuracy and cost before scaling.

Where decentralized AI and crypto converge next

Expect tighter bridges between marketplace compute, verifiable inference, and agent tooling that make end‑to‑end model delivery routine.

Market signals from 2025 (roughly $24–27B in token cap led by TAO, FET, RNDR, NEAR, OCEAN) show demand for practical, production-ready stacks. Galaxy Research stresses the central test: prove clear advantages over centralized infrastructure.

Nous Research advances make communication‑efficient model work on mixed hardware plausible. That supports more asynchronous approaches that lower dependence on specialized interconnects and costly GPU fleets.

Looking ahead, expect common proof formats, standardized attribution, and revenue-sharing protocols to emerge. U.S. enterprises will favor stacks that pair privacy-preserving proofs with clear audit trails.

Practical guidance: prioritize pilots that measure real results, require transparent verification, and align economics so providers and users capture lasting value.
