Digital ledger systems face a critical challenge as they grow. Traditional architectures require every participant to process every operation. This creates bottlenecks that limit growth and increase costs.

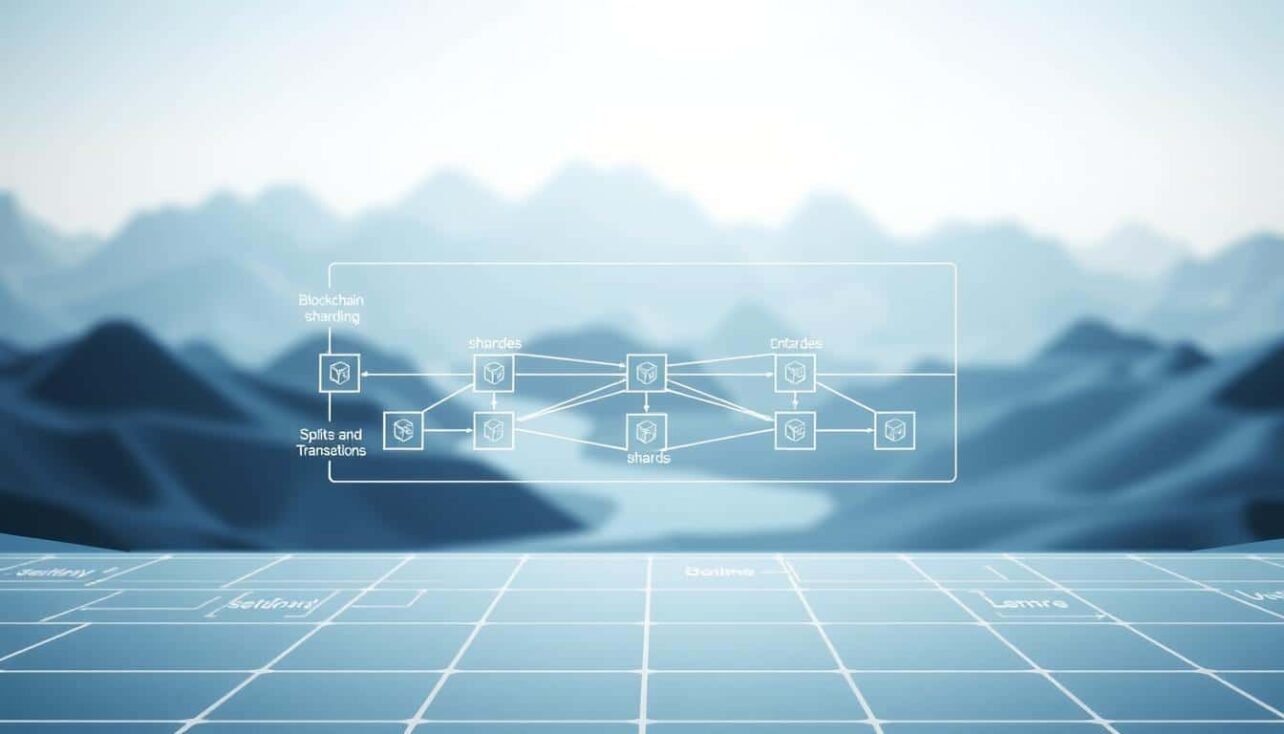

Sharding changes this dynamic. It divides the workload across multiple segments called shards. Each shard processes its own set of operations independently.

This method borrows from database management concepts. It enables parallel processing across different parts of the network. The result is significantly improved performance without sacrificing security.

Platforms such as Zilliqa and NEAR run sharded networks in production, and Ethereum has adopted a data-focused variant of the idea. Their experience shows both the practical benefits and the tradeoffs for real-world applications. This guide explores how sharding transforms decentralized systems.

We will examine the core concepts, different implementation methods, and security considerations. You will learn how this approach supports mass adoption and enterprise use cases.

Key Takeaways

- Sharding divides a blockchain into smaller, manageable pieces called shards.

- Each shard processes transactions independently, enabling parallel operation.

- This architecture significantly increases the total transaction capacity of the network.

- It reduces the hardware requirements for individual network participants.

- Cross-shard communication presents unique technical challenges that developers must solve.

- Real-world implementations show practical success on platforms like Ethereum and NEAR.

- Proper sharding design maintains security while improving scalability.

Introduction to Blockchain Sharding: A Horizontal Scaling Solution

The original blueprint for distributed ledgers, while robust, struggles to keep pace with global demand. In conventional setups, every participant must verify and record every single operation. This creates a significant bottleneck as user numbers and activity surge.

This is where network partitioning offers a transformative approach. The method involves dividing the entire system into smaller, more manageable segments. Each segment operates with a high degree of autonomy.

These independent units process their own sets of operations and smart contracts. This parallel workflow is the key to unlocking greater capacity. The total throughput of the system increases substantially as a result.

Each partition functions like a miniature version of the main network. It has its own group of validators and maintains a portion of the overall data. A coordination mechanism ensures all parts remain synchronized and secure.

The adoption of this architecture by major platforms highlights its practical value. It addresses the scalability trilemma by enhancing performance without sacrificing decentralization or security. This makes it a foundational technology for the next generation of decentralized applications.

| Architectural Feature | Traditional Model | Sharded Model |
|---|---|---|
| Node Data Burden | Every node stores the entire network state | Nodes only store data relevant to their shard |
| Transaction Processing | Sequential; all nodes process all transactions | Parallel; multiple shards process transactions simultaneously |
| Scalability Potential | Limited by single-node capacity | Scales horizontally by adding more shards |
| Hardware Requirements | High for full nodes | Reduced, enabling greater participation |

Scalability Challenges in Modern Blockchain Networks

Traditional consensus mechanisms, while secure, impose significant performance tradeoffs as participation grows. Popular networks like Bitcoin and Ethereum demonstrate these inherent limitations during periods of high demand.

The core issue stems from every node processing every transaction. This creates a direct relationship between network size and processing bottlenecks.

Impact on Transactions Per Second and Latency

Throughput capacity remains a major constraint. Bitcoin handles about 7 transactions per second, while Ethereum's base layer processes roughly 15 to 30 transactions per second. These numbers pale compared to traditional payment networks, which handle thousands of transactions per second.

As blockchains grow, confirmation times increase. Larger data sets require more time to propagate across distributed nodes. This network latency negatively impacts user experience.

| Performance Metric | Traditional Approach | Modern Requirements |
|---|---|---|
| Transactions Processed | Limited by single-chain capacity | Demands thousands per second |
| Confirmation Time | Minutes to hours during peaks | Seconds for mainstream adoption |
| Network Efficiency | All nodes validate all data | Specialized processing needed |
| Cost Structure | Fees spike during congestion | Stable, predictable pricing |

Overcoming Bottlenecks in Network Load

Peak usage periods reveal fundamental limitations. High transaction volumes create backlogs that can take hours to clear. This load imbalance wastes computational resources.

Overcoming these challenges requires new approaches. Distributed architectures that split the workload across the network can significantly improve performance while maintaining security.

The scalability trilemma forces difficult choices between decentralization, security, and performance. Modern blockchains must address this load distribution challenge to achieve mass adoption.

Fundamentals of Blockchain Sharding: Concepts & Terminology

Database management has long employed a clever technique for handling massive information loads. This approach divides large datasets into smaller, more manageable pieces called partitions.

What is Sharding in Simple Terms?

Think of sharding like organizing a massive library. Instead of one person trying to manage every book, the collection gets divided into sections. Each section has its own librarian who specializes in that area.

In technical systems, this means splitting data into smaller subsets that live on separate servers. Each machine only handles queries for its assigned partition. This shared-nothing architecture prevents any single point from becoming overwhelmed.

The same principle applies to distributed networks. Validator nodes get assigned to specific segments rather than processing everything. This division of labor allows multiple transactions to occur simultaneously across different segments.

A coordination mechanism links all the pieces together. This backbone collects information from each partition and maintains overall system integrity. The approach significantly reduces computational demands on individual participants.

Understanding this architecture helps grasp how networks achieve greater capacity. Partitioned segments maintain local consensus while contributing to global security through coordinated verification processes.

Benefits and Performance Enhancements of Sharding

The performance gains from dividing network responsibilities are immediately apparent in transaction processing efficiency. This architectural approach transforms how distributed systems handle increasing user demands.

By separating validator duties across specialized groups, systems achieve remarkable speed improvements. Each segment operates independently while contributing to overall network integrity.

Improved Transaction Speed and Throughput

Parallel processing across multiple segments represents the primary advantage. Each partition handles its own set of operations simultaneously.

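This division dramatically increases total system capacity. In the ideal case, where every transaction stays within one segment, throughput grows in proportion to the number of active segments. Cross-segment transactions reduce that gain because they consume capacity on more than one segment.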
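As a back-of-the-envelope sketch of that scaling claim, the function below estimates network throughput from the shard count and the share of cross-shard traffic. The cost model is an assumption made for illustration: a cross-shard transaction is taken to cost two units of work (one on the source shard, one on the destination). The names `effective_tps` and `cross_shard_fraction` are hypothetical and do not come from any specific protocol.

```python
def effective_tps(num_shards: int, per_shard_tps: float, cross_shard_fraction: float) -> float:
    """Estimate total throughput under a simplified cost model.

    Assumption: a local transaction costs one unit of shard capacity and a
    cross-shard transaction costs two (source shard plus destination shard).
    """
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    if not 0.0 <= cross_shard_fraction <= 1.0:
        raise ValueError("cross_shard_fraction must be between 0 and 1")
    total_capacity = num_shards * per_shard_tps
    # Average work per transaction: 1 if local, 2 if cross-shard.
    work_per_tx = 1.0 + cross_shard_fraction
    return total_capacity / work_per_tx


# Eight shards at 100 TPS each, with a quarter of traffic crossing shards.
print(effective_tps(8, 100.0, 0.25))
```

With no cross-shard traffic the estimate equals the simple product of shard count and per-shard rate; as the cross-shard share rises, the estimate falls toward half of that product under this model.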

Ethereum’s roadmap aims for substantial improvements. The often-cited target is on the order of 100,000 transactions per second, reached through rollups combined with sharded data availability rather than on the base layer alone.

Confirmation times decrease significantly during peak usage. Each segment handles only a fraction of the total load, preventing congestion delays.

Reduced Hardware Requirements for Node Operators

Validator participation becomes more accessible with reduced computational demands. Each node only processes operations relevant to its assigned segment.

Under state sharding, storage requirements fall roughly in proportion to the number of partitions. Participants maintain only their segment’s data rather than the complete history.

This democratization allows broader participation using consumer-grade equipment. The approach lowers economic barriers that previously limited network validation.

Bandwidth consumption per node decreases substantially. Validators synchronize only relevant data rather than every network transaction.

Optimizing Transaction Throughput with Sharding Solutions

The efficiency of transaction handling in partitioned networks depends on intelligent resource allocation strategies. Workload distribution algorithms ensure balanced processing across all segments.

Dynamic adjustment mechanisms respond to changing volumes. During peak periods, high-load segments can split into smaller units. This prevents congestion and maintains smooth operations.

Transaction routing protocols determine which segment processes each operation. They consider account addresses and contract locations. Optimal routing minimizes coordination between different segments.
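A minimal sketch of address-based routing follows. It assumes a simple scheme in which an account's shard is derived from a hash of its address; real networks use their own assignment rules, and the function names `shard_for` and `route` are hypothetical.

```python
import hashlib


def shard_for(address: str, num_shards: int) -> int:
    """Map an account address to a shard index by hashing the address."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    digest = hashlib.sha256(address.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[:8], "big") % num_shards


def route(sender: str, recipient: str, num_shards: int) -> dict:
    """Decide which shards a transfer touches and whether it crosses shards."""
    source = shard_for(sender, num_shards)
    dest = shard_for(recipient, num_shards)
    return {"source_shard": source, "dest_shard": dest, "cross_shard": source != dest}


print(route("alice", "bob", 4))
```

A router built this way is stateless, which keeps it cheap, but it cannot place frequently interacting accounts together. That is one reason some designs let applications influence placement.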

Parallel execution frameworks enable simultaneous processing across multiple segments. Each unit works independently without waiting for others. This maximizes resource utilization and can approach near-linear scalability when most transactions stay within a single segment.

Performance monitoring systems track throughput metrics across the entire network. They provide data-driven insights for configuration decisions. This helps identify optimization opportunities in real-time.

The effectiveness of these approaches depends on application characteristics. Transaction patterns and interaction frequency influence the final performance outcomes.

Exploring Types of Sharding in Blockchain Networks

Distributed systems employ diverse partitioning strategies to optimize performance across different operational layers. Each approach targets specific bottlenecks while maintaining overall network integrity.

State Sharding vs. Transaction Sharding

State partitioning divides the ledger’s complete data across multiple segments. Each segment maintains responsibility for its assigned accounts and contract storage.

This method requires sophisticated coordination for operations spanning different segments. It represents the most complex implementation but offers significant storage efficiency.

Transaction distribution focuses on processing workload allocation. Different segments validate various operations simultaneously, potentially sharing a unified global state.

Network Sharding and Dynamic Sharding

Network segmentation organizes validator groups into specialized units. Each group handles consensus for its designated segment without participating in others’ operations.

This approach enhances security through periodic validator rotation. It prevents concentrated attacks on individual segments.

Dynamic adjustment mechanisms automatically respond to changing demand. Systems like The Open Network demonstrate extreme scalability potential with hierarchical structures supporting billions of segments.

Hybrid approaches combine multiple partitioning types to leverage complementary strengths. They optimize resource utilization while maintaining system security and performance.

Consensus Mechanisms in Sharded Environments

Achieving unified consensus in segmented architectures presents unique technical challenges. These systems must coordinate agreement across multiple independent partitions while maintaining overall network integrity.

Proof of Work and Proof of Stake

Partitioned Proof of Work assigns miners to specific segments where they solve cryptographic puzzles. This approach can concentrate computational power unevenly across different partitions.

Proof of Stake adaptations assign validators based on their staked tokens. Cryptographic randomness prevents attackers from predicting segment assignments, enhancing overall security.
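The assignment step can be sketched as a seeded shuffle. This is an illustration only: the seed stands in for the output of a verifiable randomness beacon, Python's `random` module is not cryptographically secure, and stake weighting is ignored. The function name `assign_committees` is hypothetical.

```python
import hashlib
import random


def assign_committees(validators: list[str], num_shards: int, seed: bytes) -> dict[int, list[str]]:
    """Shuffle validators with a shared seed and deal them out round-robin."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    # Every node derives the same generator from the same epoch seed.
    # NOTE: random.Random is a stand-in; a real protocol would use a
    # cryptographically secure, verifiable shuffle.
    rng = random.Random(int.from_bytes(hashlib.sha256(seed).digest(), "big"))
    shuffled = sorted(validators)  # canonical order before shuffling
    rng.shuffle(shuffled)
    committees: dict[int, list[str]] = {shard: [] for shard in range(num_shards)}
    for position, validator in enumerate(shuffled):
        committees[position % num_shards].append(validator)
    return committees


validators = [f"validator-{i}" for i in range(10)]
for shard, members in assign_committees(validators, 3, b"epoch-1").items():
    print(shard, members)
```

Because the result depends only on the validator set and the seed, every honest node computes the same committees, while an attacker who cannot predict the seed cannot choose a target shard in advance.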

Delegated and Byzantine Fault Tolerant Models

Byzantine Fault Tolerant protocols enable fast finality within individual segments. They tolerate malicious validators as long as fewer than one-third of the validators in a segment are faulty, which means honest participants must hold strictly more than two-thirds.
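The classic fault-tolerance bound can be written as a small calculation. The sketch below uses the standard requirement that a committee of n validators tolerates f faults only when n is at least 3f + 1, and it takes a quorum to be strictly more than two-thirds of the committee. The function names are illustrative.

```python
def max_faulty(n: int) -> int:
    """Largest number of faulty validators f such that n >= 3f + 1."""
    if n < 1:
        raise ValueError("committee must have at least one validator")
    return (n - 1) // 3


def quorum_size(n: int) -> int:
    """Smallest vote count that is strictly more than two-thirds of n."""
    if n < 1:
        raise ValueError("committee must have at least one validator")
    return (2 * n) // 3 + 1


for size in (4, 100, 128):
    print(size, max_faulty(size), quorum_size(size))
```

Any two quorums of this size overlap in more validators than can be faulty, so two conflicting blocks cannot both gather a quorum without at least one honest validator signing both.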

Delegated models allow token holders to elect a limited validator set. This reduces participant numbers for faster consensus but raises centralization concerns.

Overcoming Cross-Shard Communication Complexities

Inter-segment interactions introduce unique technical hurdles that require sophisticated solutions. When operations involve multiple partitions, specialized coordination mechanisms become essential for maintaining system integrity.

These communication challenges represent the most significant engineering obstacle in segmented architectures. Operations spanning different partitions demand careful protocol design to prevent data inconsistencies.

Ensuring Data Consistency Across Shards

Maintaining accurate information across independent segments presents substantial difficulties. Multiple partitions attempting to modify shared state can create conflicts that threaten system reliability.

Sophisticated protocols prevent double-spending and ensure transaction atomicity. They maintain causal ordering of dependent operations across different segments.

Two-phase commit systems adapted from distributed databases provide transaction atomicity. A coordinator collects votes from all participating segments before committing changes.
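The voting flow can be sketched in a few lines. This is a simplified, in-memory illustration that assumes the source and destination are different shards and that nothing crashes between phases; production protocols also need timeouts, persistent logs, and recovery. The `Shard` class and `two_phase_transfer` function are hypothetical.

```python
class Shard:
    """Toy shard holding account balances and prepared (pending) changes."""

    def __init__(self, name: str, balances: dict[str, int]):
        self.name = name
        self.balances = dict(balances)
        self.pending: dict[str, tuple[str, int]] = {}  # tx_id -> (account, delta)

    def prepare(self, tx_id: str, account: str, delta: int) -> bool:
        """Phase 1: vote yes only if the change can still be applied."""
        # Funds already promised to other prepared debits are reserved.
        reserved = sum(d for a, d in self.pending.values() if a == account and d < 0)
        if self.balances.get(account, 0) + reserved + delta < 0:
            return False
        self.pending[tx_id] = (account, delta)
        return True

    def commit(self, tx_id: str) -> None:
        """Phase 2 (success): apply the prepared change."""
        account, delta = self.pending.pop(tx_id)
        self.balances[account] = self.balances.get(account, 0) + delta

    def abort(self, tx_id: str) -> None:
        """Phase 2 (failure): discard the prepared change, if any."""
        self.pending.pop(tx_id, None)


def two_phase_transfer(tx_id, src, sender, dst, recipient, amount) -> bool:
    """Coordinator: collect votes from both shards, then commit or abort."""
    participants = [(src, sender, -amount), (dst, recipient, amount)]
    votes = [shard.prepare(tx_id, account, delta) for shard, account, delta in participants]
    if all(votes):
        for shard, _, _ in participants:
            shard.commit(tx_id)
        return True
    for shard, _, _ in participants:
        shard.abort(tx_id)
    return False


shard_a = Shard("A", {"alice": 50})
shard_b = Shard("B", {"bob": 0})
print(two_phase_transfer("tx-1", shard_a, "alice", shard_b, "bob", 30))
print(shard_a.balances, shard_b.balances)
```

The key property is that neither shard changes a balance until both have voted yes, so a failed vote leaves every balance untouched.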

Secure Protocols for Cross-Shard Transactions

Security measures must prevent various attack vectors in inter-segment operations. These include double-spending across partitions and replay attacks where transactions are maliciously resubmitted.

Receipt-based communication enables segments to prove transaction execution to others. Cryptographic receipts allow efficient verification without requiring direct communication between all involved parties.
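A minimal sketch of the receipt pattern, including replay protection, is shown below. It leaves out the part that makes receipts trustworthy in practice: a real destination shard would check a cryptographic proof that the receipt appears in a finalized block of the source shard. Here the identifier only detects tampering and duplicates. The `Shard` class and `receipt_id` helper are hypothetical.

```python
import hashlib


def receipt_id(source: str, nonce: int, recipient: str, amount: int) -> str:
    """Derive a unique identifier from the receipt's contents."""
    payload = f"{source}|{nonce}|{recipient}|{amount}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class Shard:
    def __init__(self, name: str, balances: dict[str, int]):
        self.name = name
        self.balances = dict(balances)
        self.nonce = 0                     # counts outgoing receipts, keeps ids unique
        self.consumed: set[str] = set()    # ids of receipts already applied here

    def send(self, sender: str, recipient: str, amount: int) -> dict:
        """Debit the sender locally and emit a receipt for the destination."""
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[sender] -= amount
        self.nonce += 1
        return {
            "source": self.name,
            "nonce": self.nonce,
            "recipient": recipient,
            "amount": amount,
            "id": receipt_id(self.name, self.nonce, recipient, amount),
        }

    def receive(self, receipt: dict) -> bool:
        """Credit the recipient once; reject tampered or replayed receipts."""
        expected = receipt_id(receipt["source"], receipt["nonce"],
                              receipt["recipient"], receipt["amount"])
        if receipt["id"] != expected or receipt["id"] in self.consumed:
            return False
        self.consumed.add(receipt["id"])
        recipient = receipt["recipient"]
        self.balances[recipient] = self.balances.get(recipient, 0) + receipt["amount"]
        return True


shard_a = Shard("A", {"alice": 10})
shard_b = Shard("B", {})
receipt = shard_a.send("alice", "bob", 4)
print(shard_b.receive(receipt), shard_b.receive(receipt))
```

The second call returns `False` because the receipt id is already in the consumed set, which is the replay defense described above.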

| Communication Approach | Synchronous Protocol | Asynchronous Protocol |
|---|---|---|
| Coordination Timing | All segments process simultaneously | Originating segment processes first |
| Data Consistency | Immediate atomic execution | Eventual consistency with confirmation |
| Performance Impact | Higher latency due to coordination | Reduced overhead, improved throughput |
| Security Considerations | Prevents partial execution failures | Requires robust confirmation mechanisms |

Transaction latency depends on network propagation delays and the number of involved segments. The consensus mechanism’s block production rate also significantly impacts overall performance.

Adaptive Shard Management Strategies for Dynamic Workloads

The ability to automatically adjust network configurations based on real-time demand patterns separates advanced systems from static ones. This dynamic approach ensures optimal performance during both peak usage and quiet periods.

Continuous monitoring tracks key metrics across all segments. The system evaluates transaction volume, resource utilization, and processing latency for each shard.

Shard Splitting and Merging Techniques

When a shard exceeds predefined load thresholds, splitting occurs automatically. This process divides the overloaded segment into smaller, more manageable units.

Each new shard inherits a portion of the original segment’s accounts and data. Validator assignments redistribute to maintain balanced participation across the network.

Merging combines underutilized shards when their combined load decreases. This consolidation reduces overhead and optimizes resource allocation throughout the system.
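The threshold logic for splitting and merging can be sketched as a planning function. The sketch assumes shards are identified by binary prefixes, so that shard `"1"` splits into `"10"` and `"11"` and only sibling shards merge back into their parent. This mirrors prefix-based designs in spirit, but the identifiers, thresholds, and function name `plan_rebalance` are hypothetical.

```python
def plan_rebalance(loads: dict[str, float], split_above: float, merge_below: float) -> list[tuple]:
    """Return split and merge actions for shards identified by bit prefixes.

    loads maps a shard prefix (for example "0", "10", "11") to its current load.
    """
    if merge_below >= split_above:
        raise ValueError("merge_below must be lower than split_above")
    actions: list[tuple] = []
    handled: set[str] = set()
    for shard in sorted(loads):
        if shard in handled:
            continue
        load = loads[shard]
        if load > split_above:
            # Overloaded: divide the prefix into its two children.
            actions.append(("split", shard, shard + "0", shard + "1"))
            handled.add(shard)
            continue
        if shard:  # the root shard "" has no sibling to merge with
            sibling = shard[:-1] + ("1" if shard[-1] == "0" else "0")
            if (sibling in loads and sibling not in handled
                    and load + loads[sibling] < merge_below):
                # Both siblings are quiet: fold them back into the parent prefix.
                actions.append(("merge", shard, sibling, shard[:-1]))
                handled.update({shard, sibling})
    return actions


print(plan_rebalance({"00": 10, "01": 15, "1": 950}, split_above=800, merge_below=100))
```

Keeping the merge threshold well below the split threshold avoids oscillation, where a freshly merged shard immediately qualifies for splitting again.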

| Management Action | Splitting Process | Merging Process |
|---|---|---|
| Trigger Condition | High transaction volume or resource utilization | Low combined load across multiple shards |
| Data Handling | State data redistributed across new shards | Accounts and storage consolidated into single shard |
| Network Impact | Increases total shard count | Reduces overall shard count for efficiency |
| Implementation | Common across many distributed networks | Less common; The Open Network is a prominent example |

The Open Network supports both splitting and merging, so its shard count can grow and shrink with demand. This bidirectional adjustment is what makes the design elastic rather than merely expandable.

Real-World Implementations: Ethereum, Zilliqa, TON, and NEAR

Real-world platforms demonstrate how network segmentation operates in production environments. These implementations reveal diverse strategies for handling increased computational demands.

Case Study: Ethereum’s Evolution and Proto-Danksharding

Ethereum’s approach transformed significantly over time. The original roadmap called for 64 shards, each secured by a committee of at least 128 validators, but developers later shifted strategy.

The new design focuses on data availability for Layer 2 solutions. Proto-danksharding introduces blob-carrying transactions that attach large data chunks.

This allows rollups to post transaction data at reduced costs. The Cancun-Deneb (Dencun) upgrade, activated on mainnet in March 2024, introduced this feature.

Insights into Zilliqa, TON, and NEAR Models

Zilliqa, founded in 2017, was among the first projects to put sharding into production when its mainnet launched in 2019. Its architecture uses four segments that process transactions in parallel.

However, nodes still maintain complete network state. This limits storage benefits while improving throughput.

The Open Network features extremely ambitious capacity. Its design allows for up to 2^32 workchains, each of which can split into as many as 2^60 shards.

NEAR Protocol’s Nightshade progressed through multiple phases. Simple Nightshade launched in November 2021 with four segments.

The system distinguishes between full validators and chunk-only producers. This democratizes participation with reduced hardware requirements.

| Platform | Implementation Year | Segment Count | Key Innovation |
|---|---|---|---|
| Zilliqa | 2019 (mainnet; project founded 2017) | 4 shards | Early production implementation |
| Ethereum | 2024 (proto-danksharding) | Data-focused | Blob-carrying transactions |
| TON | Ongoing | Up to 2^60 per workchain | Smart contract segmentation |
| NEAR | 2021 (Phase 0) | 4+ (dynamic) | Chunk-only producers |

These platforms represent different philosophical approaches. Zilliqa and NEAR focus on execution distribution, while Ethereum prioritizes data availability solutions.

Innovations: Proto-Danksharding and Advanced Scaling Methods

A groundbreaking innovation in distributed ledger technology involves separating data availability from transaction execution through specialized blob mechanisms. This approach focuses on optimizing how information flows through the system.

Proto-danksharding represents Ethereum’s strategic pivot toward data-focused partitioning. Instead of traditional execution methods, it provides cost-effective storage for Layer 2 solutions.

Blob-Carrying Transactions and the Cancun Upgrade

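Blob-carrying transactions introduce a new format for handling large data volumes. Each blob holds 4,096 field elements of 32 bytes each, or 128 KiB, a size often rounded to about 125 kilobytes.

These Binary Large Objects remain separate from the Ethereum Virtual Machine. Smart contracts cannot access blob data directly; they can only reference a commitment to it.

The Kate-Zaverucha-Goldberg (KZG) commitment scheme enables efficient verification. A short commitment lets anyone check that blob contents match what the transaction claims. Under proto-danksharding, nodes still download complete blobs; sampling a blob without downloading all of it is planned for full danksharding.

Blobs are kept only temporarily, for roughly 18 days, which reduces long-term node burdens. Rollups can retrieve and archive data permanently if needed.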
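The blob size and retention window follow from a handful of protocol constants. The values below are the ones given, to the best of my knowledge, in EIP-4844 and the Deneb consensus specification; treat them as assumptions and check the current specifications before relying on them.

```python
# Constants as defined (to my understanding) in EIP-4844 and the Deneb
# consensus specs; verify against the current specifications.
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS = 4096
SLOTS_PER_EPOCH = 32
SECONDS_PER_SLOT = 12

blob_size_bytes = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT
retention_seconds = (MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS
                     * SLOTS_PER_EPOCH * SECONDS_PER_SLOT)
retention_days = retention_seconds / 86_400

print(f"blob size: {blob_size_bytes} bytes ({blob_size_bytes // 1024} KiB)")
print(f"minimum retention: {retention_days:.1f} days")
```

The retention figure is a minimum that nodes must serve; individual nodes or archival services may keep blob data longer.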

| Feature | Traditional Approach | Proto-Danksharding |
|---|---|---|
| Data Handling | Permanent calldata storage | Temporary blob storage |
| Transaction Cost | High Layer 2 fees | Up to 100x reduction |
| Verification Method | Full data download | KZG polynomial commitments |
| Network Impact | Increasing storage burden | Reduced long-term load |

The Cancun-Deneb upgrade implemented these innovations. After slipping from 2023, it went live on mainnet in March 2024 and marks a critical milestone for Ethereum’s scaling roadmap.

Ensuring Security and Fault Tolerance in Sharded Blockchains

Protecting partitioned systems demands specialized approaches to prevent targeted attacks on individual segments. Each segment operates with fewer validators than traditional networks, creating unique security challenges.

Mitigating Sybil Attacks and Shard-Level Threats

Random validator assignment prevents attackers from concentrating malicious nodes in specific segments. Cryptographic randomness ensures unpredictable distribution across the entire network.
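How much protection random assignment gives depends on committee size, and that can be estimated with a hypergeometric calculation. The sketch below assumes committees are drawn uniformly without replacement from the full validator set, and it asks how likely it is that at least a given number of malicious validators land in one committee. The function name and the example figures are illustrative.

```python
from math import comb


def takeover_probability(total: int, malicious: int, committee: int, threshold: int) -> float:
    """P(at least `threshold` malicious members in one random committee).

    Assumes the committee is a uniform sample without replacement
    (hypergeometric distribution).
    """
    if not 0 <= malicious <= total or not 0 < committee <= total:
        raise ValueError("inconsistent validator counts")
    favorable = sum(
        comb(malicious, k) * comb(total - malicious, committee - k)
        for k in range(threshold, min(malicious, committee) + 1)
    )
    return favorable / comb(total, committee)


# 1,000 validators, 25% malicious; attacker needs more than one-third of a committee.
for size, needed in ((16, 6), (128, 43)):
    print(size, takeover_probability(1000, 250, size, needed))
```

The same attacker share is far more dangerous against small committees, which is why sharded designs set a minimum committee size rather than simply maximizing the number of shards.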

Periodic reconfiguration reshuffles validators at regular intervals. This limits the time attackers can control any single segment. Even successful infiltration becomes temporary and less damaging.

Economic barriers like staking requirements make Sybil attacks prohibitively expensive. Each validator must lock substantial collateral that can be slashed for malicious behavior.

Cross-segment operations require specialized protocols to prevent double-spending and replay attacks. Fraud proofs and cryptographic receipts ensure transaction integrity across different segments.

Continuous monitoring systems detect anomalies in validator behavior and transaction patterns. Rapid response mechanisms include validator slashing and emergency reconfiguration when threats are identified.

Enhancing Data Synchronization and Network Communication

State consistency mechanisms represent a critical engineering challenge in segmented architectures, where multiple independent units must maintain synchronized information. Efficient data flow ensures all participants operate with identical views of the system state.

Consensus Efficiency and State Synchronization Techniques

Merkle tree structures provide cryptographically efficient mechanisms for state verification. Nodes can compare root hashes to detect inconsistencies quickly. This approach minimizes bandwidth usage while maintaining security.
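A small Merkle tree makes the root-hash comparison concrete. This is a teaching sketch under simplifying assumptions: it uses SHA-256, duplicates the last node on odd-sized levels, and omits the leaf and inner-node domain separation that production trees use to prevent certain forgeries. All function names are hypothetical.

```python
import hashlib


def _hash(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    """Hash adjacent pairs; `level` must have even length."""
    return [_hash(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list[bytes]) -> bytes:
    """Compute the root hash of a list of leaf values."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = [_hash(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Return sibling hashes from leaf to root as (hash, sibling_is_left)."""
    level = [_hash(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1  # flip the lowest bit to find the pair partner
        proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_proof(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path to the root and compare with the expected root."""
    node = _hash(leaf)
    for sibling, sibling_is_left in proof:
        node = _hash(sibling + node) if sibling_is_left else _hash(node + sibling)
    return node == root


accounts = [b"alice:20", b"bob:30", b"carol:5", b"dave:0", b"erin:12"]
root = merkle_root(accounts)
print(verify_proof(b"carol:5", merkle_proof(accounts, 2), root))
```

Two nodes that hold the same state compute the same root, so a single 32-byte comparison is enough to detect divergence, and a proof of logarithmic length shows that one account belongs to the agreed state.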

Incremental synchronization techniques transmit only data changes rather than complete snapshots. This optimization proves valuable during validator rotation or segment adjustments. The approach significantly reduces processing requirements.
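The idea of sending changes instead of snapshots can be sketched with plain dictionaries. This illustration assumes state is a flat key-value map; real clients work with tries and authenticated diffs, and the function names `diff_state` and `apply_diff` are hypothetical.

```python
_MISSING = object()  # sentinel so that stored None values are handled correctly


def diff_state(old: dict, new: dict) -> dict:
    """Describe how to turn `old` into `new` using only the changed entries."""
    changed = {key: value for key, value in new.items()
               if old.get(key, _MISSING) != value}
    removed = [key for key in old if key not in new]
    return {"set": changed, "delete": removed}


def apply_diff(state: dict, diff: dict) -> dict:
    """Return a copy of `state` with the diff applied."""
    result = dict(state)
    result.update(diff["set"])
    for key in diff["delete"]:
        result.pop(key, None)
    return result


old_state = {"alice": 20, "bob": 30, "carol": 5}
new_state = {"alice": 20, "bob": 25, "dave": 10}
delta = diff_state(old_state, new_state)
print(delta)
print(apply_diff(old_state, delta) == new_state)
```

Only the entries that changed travel over the network, so the cost of catching up scales with recent activity rather than with total state size.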

Cross-segment consistency presents unique challenges as different units may progress at varying rates. Coordination protocols ensure all segments eventually converge to consistent views. Research in this area continues to explore cryptographic techniques for maintaining integrity across distributed systems.

| Synchronization Method | Bandwidth Usage | Recovery Time | Security Level |
|---|---|---|---|
| Full State Download | High | Slow | Maximum |
| Incremental Updates | Low | Fast | High |
| Merkle Proof Verification | Minimal | Instant | Cryptographic |
| Gossip Protocol | Medium | Variable | Decentralized |

Dispute resolution mechanisms detect state inconsistencies through decentralized processes. Validators submit fraud proofs demonstrating invalid transitions. Economic penalties incentivize honest behavior throughout the network.

Integrating Smart Contracts within Sharded Ecosystems

Smart contract functionality introduces new dimensions to partitioned network architectures. These self-executing agreements must operate efficiently across distributed segments while maintaining security and consistency.

Strategic placement decisions determine which segment hosts each contract. Developers consider expected transaction volume and interaction patterns. Account distribution across segments also influences these choices.

Smart Contract Deployment and Inter-Shard Communication

The Open Network pioneered contract segmentation, allowing individual agreements to split into multiple instances. Each instance handles specific functionality while coordinating with others.

Fungible token implementations demonstrate this approach effectively. Separate contract instances manage individual user balances. A parent contract tracks total supply and metadata.

Non-fungible token collections deploy unique contracts for each asset. This distributes storage and processing automatically as collections grow. The system maintains functionality across multiple segments.

| Contract Type | Deployment Strategy | Segment Interaction | Performance Benefit |
|---|---|---|---|
| Fungible Tokens | Balance instances per user | Minimal cross-segment calls | Distributed storage load |
| NFT Collections | Individual asset contracts | Collection-level coordination | Automatic growth scaling |
| Complex DApps | Functional module separation | Protocol-mediated calls | Parallel execution |
| Governance Systems | Voting instance per segment | Result aggregation | Reduced contention |

Cross-segment communication presents significant complexity. Contracts on different segments require specialized protocols for interaction. These systems must verify execution results and handle failures.

Atomic execution ensures multi-contract transactions complete successfully across all involved segments. This prevents partial execution that could create inconsistent states. Developers must design for these distributed environments.

Future Trends and Emerging Technologies in Blockchain Sharding

Artificial intelligence, specialized hardware, and quantum-resistant cryptography represent the next frontier for segmented architectures. These technologies could change how distributed networks handle increasing demands.

Machine learning algorithms can predict transaction patterns with remarkable accuracy. This enables automatic adjustment of segment boundaries before congestion occurs. The approach minimizes communication overhead between different partitions.

AI Integration and Hardware Advancements

Specialized cryptographic accelerators and high-bandwidth memory architectures are transforming node capabilities. Individual participants can now handle larger segments or join multiple partitions simultaneously.

Zero-knowledge proof technology converges with partitioning methods to enhance privacy. Segments can verify correct execution without revealing sensitive transaction details. This dual benefit addresses both scalability and confidentiality concerns.

Innovative Load Distribution Approaches

Game-theoretic mechanisms incentivize users to distribute activities across underutilized segments. This achieves balanced workloads without forced account migrations. The voluntary approach maintains network decentralization.

Application-specific segments allow customization for particular use cases like DeFi or gaming. Specialized consensus mechanisms and transaction formats maximize performance for target applications.

| Emerging Technology | Primary Benefit | Implementation Timeline |
|---|---|---|
| AI-Powered Optimization | Predictive load balancing | 2-3 years |
| Quantum-Resistant Cryptography | Future-proof security | 3-5 years |
| Cross-Chain Protocols | Interconnected ecosystem | 1-2 years |
| Hardware Accelerators | Enhanced node performance | Currently available |

The convergence of partitioning with Layer 2 solutions creates hybrid architectures. These combinations deliver comprehensive scaling benefits for next-generation applications.

Conclusion

Network architecture evolution continues to redefine what’s possible for decentralized applications worldwide. This partitioning approach represents a fundamental breakthrough in addressing capacity constraints that previously limited growth.

Real-world implementations across major platforms demonstrate the technology’s practical viability. Each deployment reflects unique design philosophies tailored to specific operational goals.

Security considerations remain absolutely critical in these distributed systems. Proper validator assignment and cross-segment protocols ensure robust protection against potential threats.

The complexity of inter-segment communication presents ongoing technical challenges. However, continuous research yields increasingly efficient methods for maintaining data consistency.

Looking forward, hybrid approaches combining multiple scaling techniques show great promise. These multi-layered architectures will likely define the next generation of distributed systems.

As this technology matures, standardized protocols and developer tools are emerging. This makes partitioned architectures more accessible for broader implementation.

Ultimately, the success of these innovations will determine whether distributed systems can achieve their full transformative potential. The ability to process massive transaction volumes while maintaining security is crucial for mainstream adoption.

FAQ

What is the main goal of implementing sharding in a blockchain?

The primary goal is to significantly increase the network’s capacity to handle a higher volume of transactions per second. By dividing the workload across multiple, smaller groups of nodes called shards, the system processes operations in parallel, overcoming the bottlenecks of traditional single-chain architectures.

How does sharding improve transaction speed and reduce fees?

It enhances speed by allowing each shard to process its own set of transactions and smart contracts simultaneously. This parallel processing drastically boosts overall throughput. With more capacity, network congestion decreases, which typically leads to lower fees for users.

What are the different types of sharding used in networks like Ethereum and Zilliqa?

Common approaches include state sharding, where each shard maintains a unique portion of the entire blockchain’s state, and transaction sharding, which only distributes the processing of transactions. Projects like Zilliqa employ network sharding to group validators, while Ethereum’s roadmap includes advanced methods like proto-danksharding.

Is security compromised when a blockchain is split into shards?

Security remains a top priority. Robust consensus mechanisms and cryptographic protocols are designed to maintain data consistency and protect against threats like single-shard attacks. Techniques such as frequent random reassignment of validators to different shards help preserve the system’s overall fault tolerance and integrity.

Can smart contracts function effectively in a sharded environment?

Yes, but it requires careful design. Smart contracts can be deployed on specific shards. Ensuring they can communicate and execute transactions across different shards securely is a key challenge that solutions like cross-shard communication protocols are built to address.

What is cross-shard communication and why is it important?

Cross-shard communication refers to the protocols that allow different shards to exchange information and validate transactions that involve assets or data from multiple shards. It is crucial for maintaining a cohesive and unified system, ensuring that the entire network operates as one secure ledger.
