The digital asset landscape moves at a relentless pace. Manual monitoring of price shifts is often impractical for modern investors. Automated systems offer a powerful solution for capturing value around the clock.

This guide provides a structured path for creating your own automated assistant. We focus on practical steps, from foundational ideas to advanced techniques. You will learn to construct a system that operates independently.

Python serves as an excellent foundation for this project. Its vast ecosystem includes libraries for data analysis and machine learning. These tools simplify connecting to exchanges and implementing smart strategies.

Whether you are new to algorithmic concepts or an experienced coder, this resource is designed for you. It covers essential components like data collection, strategy coding, and risk management. The goal is a robust and effective automated solution.

Key Takeaways

- Automation is crucial for success in the 24/7 cryptocurrency markets.

- Python provides the necessary tools and libraries for efficient development.

- A functional system requires data feeds, strategy logic, and execution mechanisms.

- Proper risk management protocols are essential for protecting your capital.

- This guide is suitable for both beginners and experienced developers.

- You will learn to backtest strategies before deploying them with real funds.

- The final product can adapt to changing market conditions.

Introduction to AI Crypto Trading Bots

Modern financial technology offers unprecedented capabilities for market engagement. Automated systems represent a significant advancement in how participants interact with digital assets.

These tools operate continuously, scanning multiple exchanges simultaneously. They execute decisions based on predefined parameters without emotional interference.

Overview of Automated Crypto Trading

Traditional automated systems relied on fixed rules and static algorithms. They followed simple instructions like buying when prices crossed specific thresholds.

This approach can work in predictable environments but struggles in volatile conditions. Cryptocurrency markets demand more adaptive solutions.

AI-powered platforms mark a fundamental shift in capability. Instead of following rigid rules, they learn from historical market data and sentiment signals.

The Role of Python in Transforming Trading Strategies

Python has emerged as the dominant language for developing sophisticated trading software. Its extensive library ecosystem provides powerful tools for financial analysis.

The language is accessible to beginners while supporting advanced development needs. Specialized open-source frameworks streamline the path from a prototype to a complete trading system.

Machine learning libraries such as scikit-learn make it practical to retrain models as new market data arrives. This combination makes Python well suited to building adaptive trading systems.

Understanding the Cryptocurrency Trading Landscape

Navigating the cryptocurrency market requires a deep appreciation for its unique, non-stop nature. This digital arena operates globally, without the opening and closing bells of traditional finance.

Price movements can be dramatic, with double-digit percentage swings sometimes unfolding within hours or even minutes. These shifts are frequently triggered by news, regulatory updates, or large investor actions.

Market Conditions and Volatility Insights

The relentless pace of these markets makes automated tools essential. They capture opportunities that arise at any hour. Static systems struggle with sudden changes in market sentiment.

Intelligent platforms, however, can recognize context and adapt. This adaptability is crucial for sustained success.

Market regimes vary significantly, impacting strategy performance:

- Bull markets feature sustained upward price trends.

- Bear markets involve prolonged downward pressure.

- Sideways markets see prices move within a narrow range.
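One simple way to turn these regime labels into code is a rule of thumb based on the net price change over a lookback window. The sketch below is a heuristic, not a machine learning model; the `classify_regime` name and the 5% threshold are illustrative assumptions.

```python
def classify_regime(closes: list[float], threshold: float = 0.05) -> str:
    """Label a price series as 'bull', 'bear', or 'sideways'.

    Uses the net percentage change from the first to the last close.
    The threshold is an illustrative assumption, not a standard value.
    """
    if len(closes) < 2:
        raise ValueError("need at least two closing prices")
    change = closes[-1] / closes[0] - 1.0
    if change > threshold:
        return "bull"
    if change < -threshold:
        return "bear"
    return "sideways"


print(classify_regime([100, 104, 109, 115]))  # bull
print(classify_regime([100, 97, 92, 88]))     # bear
print(classify_regime([100, 102, 99, 101]))   # sideways
```

A net-change rule ignores the path in between: a series that crashes and fully recovers reads as sideways, which is why regime filters often add volatility or trend-strength measures.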

A strategy that excels in one regime may fail in another, so understanding these dynamics is fundamental. Volatility stems from many factors, including regulatory uncertainty and shifts in market sentiment. For those seeking a ready-made solution, exploring the best free AI crypto trading bots can be a valuable first step.

How to Build an AI Crypto Trading Bot in Python

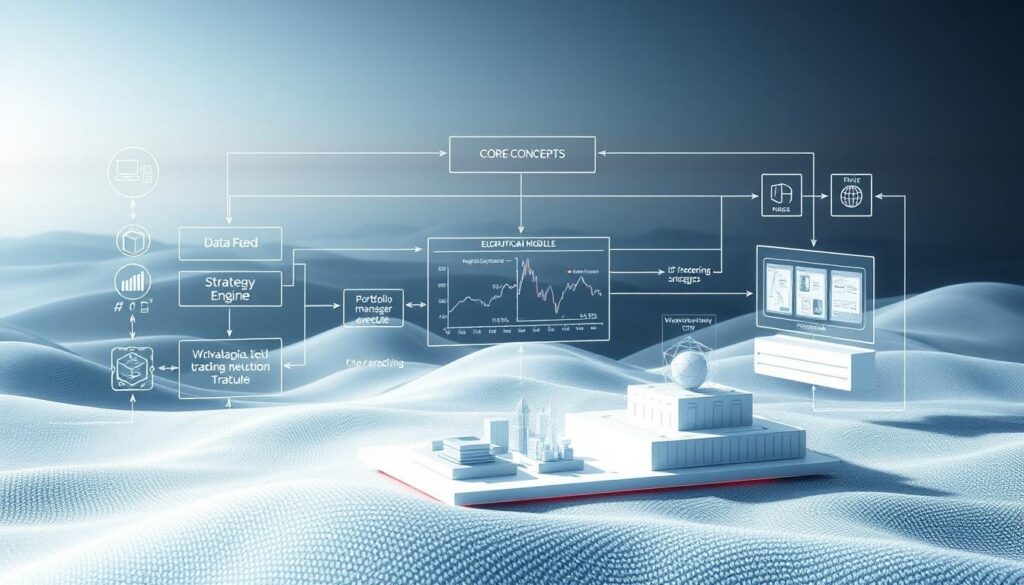

The journey toward automated market participation starts with core conceptual knowledge. Understanding what makes these systems work is the first step toward creating your own.

Core Concepts and User Intent Explained

Automated financial systems operate through interconnected components. They gather market information, analyze opportunities, make decisions, and execute orders.

People seek this knowledge for various reasons. Some want to remove emotion from their decisions. Others aim to scale their existing approaches.

Basic programming skills are essential for getting started. Familiarity with variables and functions provides a solid foundation, but you don’t need expert-level knowledge to begin.

The learning process follows a logical progression. Start with simple rule-based approaches before moving on to machine learning models. Testing on historical data (backtesting) helps validate your methods safely.

Always begin with simulated trading mode. This allows you to understand system behavior without financial risk. Proper testing ensures your creation works as intended.

Market understanding develops alongside technical skills. The combination creates powerful automated solutions. Each component builds toward a complete system.

Essential Components of an AI Trading Bot

The architecture of a successful trading system comprises multiple specialized modules handling different functions. These components work together to process information and execute decisions.

Each layer serves a distinct purpose while maintaining communication with other modules. This integrated approach ensures comprehensive market coverage.

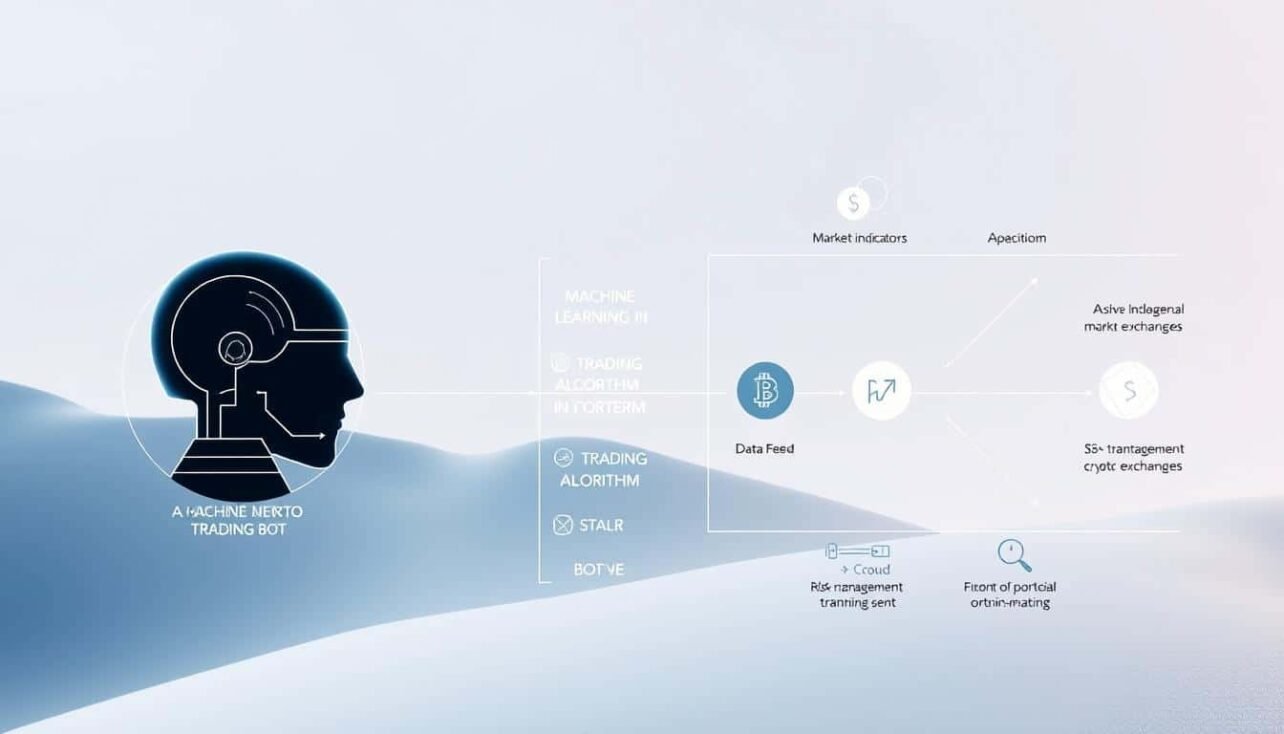

Data Collection and Market Data

The foundation rests on robust data gathering capabilities. Systems continuously collect three types of critical information.

Historical market data provides training material for predictive models. Real-time feeds deliver current prices and order book depth. Sentiment analysis monitors news and social media for psychological context.
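Real-time feeds usually arrive as individual trades, while most strategies consume OHLCV (open, high, low, close, volume) candles. The sketch below aggregates trades into fixed-interval candles using only the standard library. The `(timestamp, price, volume)` tuple layout, the `Candle` class, and the 60-second interval are assumptions for illustration, not any exchange's wire format.

```python
from dataclasses import dataclass


@dataclass
class Candle:
    start: int  # bucket start time, Unix seconds
    open: float
    high: float
    low: float
    close: float
    volume: float


def trades_to_candles(trades, interval: int = 60) -> list[Candle]:
    """Aggregate (timestamp, price, volume) trades into OHLCV candles.

    Trades are assumed to be sorted by timestamp (Unix seconds).
    """
    candles: list[Candle] = []
    for ts, price, volume in trades:
        bucket = ts - ts % interval  # floor the timestamp to the interval boundary
        if candles and candles[-1].start == bucket:
            c = candles[-1]  # same interval: update the open candle
            c.high = max(c.high, price)
            c.low = min(c.low, price)
            c.close = price
            c.volume += volume
        else:
            candles.append(Candle(bucket, price, price, price, price, volume))
    return candles


trades = [(0, 100.0, 1.0), (30, 101.5, 0.5), (59, 99.8, 2.0), (61, 100.2, 1.0)]
for candle in trades_to_candles(trades):
    print(candle)
```

Intervals with no trades produce no candle here; a production feed would typically forward-fill those gaps or request candles directly from the exchange.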

This comprehensive data approach enables informed decision-making. The quality of information directly impacts system performance.

Integrating Machine Learning and Risk Management

Advanced systems transform raw data into actionable intelligence. Machine learning models identify patterns and attempt to predict price movements.

Classification algorithms can label distinct market conditions, such as trending or ranging markets. Neural networks can capture non-linear relationships that traditional indicator rules miss.

Risk management represents the most critical safety component. Position sizing algorithms determine capital allocation per trade. Stop-loss mechanisms cap the loss on each position during volatile moves, although fast markets can still fill an exit beyond the stop price.

Effective protocols help preserve capital during losing streaks. This integration creates a balanced approach to automated trading.

The Technology Stack Behind AI Trading Bots

The choice of development tools and frameworks directly impacts the performance and scalability of trading platforms. A well-designed technology foundation enables efficient market data processing and rapid decision execution.

Utilizing Python, FastAPI, and MongoDB

Python serves as the primary programming language for these sophisticated systems. Its extensive ecosystem includes powerful libraries for financial analysis and machine learning applications.

Essential tools like pandas handle time-series data manipulation effectively. NumPy provides numerical computing capabilities, while libraries such as CCXT offer a unified interface to many exchanges.

FastAPI can power the backend. It supports asynchronous request handling, which helps keep latency low, and its Pydantic-based validation rejects malformed requests before they reach trading logic.

MongoDB can store large volumes of semi-structured market information, such as historical prices, news sentiment scores, and trading logs. Its document model accommodates diverse record types without rigid schema constraints.

Docker ensures consistent deployment across different environments. Containerization minimizes compatibility issues between development and production systems. Additional components such as Ollama, which runs large language models locally, can score the sentiment of news articles.

The complete technology stack creates a robust foundation for automated market participation. Each component contributes to the overall reliability and performance of the financial software.

Implementing Cutting-Edge Trading Algorithms

Strategic implementation separates basic automation from truly intelligent market participation. The core intelligence lies in algorithms that process multiple data streams simultaneously.

Effective approaches evaluate technical patterns, market sentiment, and volume dynamics. This multi-factor methodology creates robust decision-making frameworks.

Simple vs. Advanced Trading Strategies

Basic systems use straightforward rules like moving average crossovers. These approaches offer transparency and computational efficiency.
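A moving average crossover fits in a few lines. This minimal sketch in plain Python, with hypothetical window lengths of 3 and 5 bars, emits "buy" when the short simple moving average (SMA) crosses above the long one and "sell" when it crosses below.

```python
from typing import Optional


def sma(values: list[float], window: int) -> list[Optional[float]]:
    """Simple moving average; None until `window` values are available."""
    out: list[Optional[float]] = []
    for i in range(len(values)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(sum(values[i + 1 - window : i + 1]) / window)
    return out


def crossover_signals(closes: list[float], short: int = 3, long: int = 5) -> list[Optional[str]]:
    """Return 'buy', 'sell', or None for each bar based on SMA crossovers."""
    fast, slow = sma(closes, short), sma(closes, long)
    signals: list[Optional[str]] = [None] * len(closes)
    for i in range(1, len(closes)):
        if None in (fast[i - 1], slow[i - 1], fast[i], slow[i]):
            continue  # not enough history for both averages yet
        if fast[i - 1] <= slow[i - 1] and fast[i] > slow[i]:
            signals[i] = "buy"   # short average crossed above the long one
        elif fast[i - 1] >= slow[i - 1] and fast[i] < slow[i]:
            signals[i] = "sell"  # short average crossed below the long one
    return signals


closes = [5, 5, 5, 5, 5, 6, 7, 8, 7, 5, 3, 2]
signals = crossover_signals(closes)
print([(i, s) for i, s in enumerate(signals) if s])  # [(5, 'buy'), (10, 'sell')]
```

Signals are computed on closing prices, so a backtest should act on them at the next bar rather than the same close to avoid look-ahead bias.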

Advanced methodologies incorporate dozens of data points. They analyze technical patterns alongside sentiment and correlation relationships.

Sophisticated systems adapt their parameters based on market conditions. This flexibility maintains performance across different environments.

Leveraging Technical Indicators and Sentiment Analysis

Technical tools form the foundation of most algorithmic approaches. Momentum indicators such as the Relative Strength Index (RSI) identify overbought and oversold conditions. Trend-following tools such as moving averages reveal directional bias.
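The Relative Strength Index (RSI) is a widely used momentum indicator; readings above 70 are conventionally read as overbought and below 30 as oversold. The sketch below implements Wilder's smoothing in plain Python with the customary 14-period default. It is an illustration, not a replacement for a tested indicator library, and returning 100 when there are no losses in the window is a convention choice.

```python
from typing import Optional


def _rsi_value(avg_gain: float, avg_loss: float) -> float:
    if avg_loss == 0:
        return 100.0  # no losses in the window: maximum strength by convention
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def rsi(closes: list[float], period: int = 14) -> list[Optional[float]]:
    """Wilder's RSI; the first `period` entries are None (not enough history)."""
    if len(closes) <= period:
        return [None] * len(closes)
    deltas = [b - a for a, b in zip(closes, closes[1:])]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]
    # Seed with simple averages over the first `period` price changes.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    out: list[Optional[float]] = [None] * period
    out.append(_rsi_value(avg_gain, avg_loss))
    # Wilder's smoothing: each new change gets weight 1/period.
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period
        out.append(_rsi_value(avg_gain, avg_loss))
    return out


print(rsi([10, 11, 10, 11], period=2))  # [None, None, 50.0, 75.0]
```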

Sentiment analysis provides crucial psychological context. It processes news articles and social media discussions. This reveals the human psychology driving price movements.

The most successful strategies combine multiple analytical approaches. They balance technical signals with sentiment context and risk management rules.

Managing Trading Fees and Cost Efficiency

Fee management often determines the difference between profitable and unprofitable automated strategies. Even small percentages accumulate significantly over numerous transactions.

Understanding Fee Structures and Impact

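Exchanges employ various fee models that affect overall costs. Under the common maker-taker model, the rate depends on whether an order adds liquidity to the order book (maker) or removes it (taker).

Limit orders that rest on the order book typically qualify for lower maker fees. Market orders, which fill immediately against existing orders, incur higher taker charges. This distinction affects strategy selection, since strategies that need immediate fills pay more per trade.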

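Representative spot-trading fees at each exchange's entry-level volume tier (schedules change periodically):

| Exchange | Maker Fee | Taker Fee | Volume Tier |
|---|---|---|---|
| Binance | 0.08% | 0.10% | Basic |
| Coinbase | 0.40% | 0.60% | Standard |
| Kraken | 0.16% | 0.26% | Starter |
| KuCoin | 0.10% | 0.10% | Default |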
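To make the maker-taker difference concrete, the sketch below uses the Kraken row of the table to compare the round-trip cost of a $1,000 position entered and exited with resting limit orders (maker both ways) against market orders (taker both ways). The rates are copied from the table, and treating the exit notional as equal to the entry notional is a simplifying assumption.

```python
def round_trip_fee(notional: float, entry_rate: float, exit_rate: float) -> float:
    """Total fee paid to open and close a position of the given notional value.

    Assumes the position is closed at the same notional value it was opened at.
    """
    return notional * entry_rate + notional * exit_rate


MAKER, TAKER = 0.0016, 0.0026  # Kraken starter tier from the table (0.16% / 0.26%)

maker_cost = round_trip_fee(1_000, MAKER, MAKER)
taker_cost = round_trip_fee(1_000, TAKER, TAKER)
print(f"maker/maker: ${maker_cost:.2f}")  # maker/maker: $3.20
print(f"taker/taker: ${taker_cost:.2f}")  # taker/taker: $5.20
```

At these rates a trade needs roughly a 0.32% favorable move just to break even with maker orders, versus 0.52% with taker orders.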

Minimizing Costs with AI Optimization Techniques

Advanced systems weigh transaction costs alongside market opportunities. They favor trades whose expected gain clearly exceeds the round-trip fee.

Filtering out marginal signals reduces total trade frequency without necessarily hurting performance. Because fees are charged on every entry and exit, fewer trades mean substantially lower cumulative fees.
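The effect of trade frequency compounds. The sketch below estimates the fraction of a balance that fees alone consume after n round trips at a given per-side rate, and shows a simple filter that skips trades whose expected move does not clear the round-trip cost plus a safety margin. The 0.10% rate matches the KuCoin row of the fee table; the 0.1% margin and both function names are illustrative assumptions.

```python
def fee_drag(round_trips: int, fee_rate: float) -> float:
    """Fraction of capital lost to fees alone after n round trips.

    Each round trip pays fee_rate twice (entry and exit) on the full balance,
    ignoring price changes.
    """
    return 1.0 - (1.0 - fee_rate) ** (2 * round_trips)


def worth_trading(expected_move: float, fee_rate: float, margin: float = 0.001) -> bool:
    """Only trade when the expected move beats round-trip fees plus a margin."""
    return expected_move > 2 * fee_rate + margin


for n in (10, 100, 500):
    print(f"{n} round trips: {fee_drag(n, 0.001):.1%} of capital paid in fees")

print(worth_trading(0.002, 0.001))  # False: 0.2% does not clear 0.2% fees + 0.1% margin
print(worth_trading(0.005, 0.001))  # True
```

Because the drag compounds, cutting the number of round trips has a larger effect than shaving a few basis points off the rate.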

Platform selection and order timing further enhance cost efficiency; placing limit orders when a strategy can afford to wait for a fill captures the lower maker rate. These considerations collectively improve net returns for systematic participants.

Setting Up Your Bot Environment

Establishing a proper development environment marks the critical first step in creating reliable automated financial software. This foundation ensures your system operates efficiently without technical interruptions.

Installation Process with Docker and Freqtrade

Docker provides the most straightforward installation method across different operating systems. It containerizes the entire environment, eliminating dependency conflicts.

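The process begins with creating a project directory and downloading Freqtrade's Docker Compose file. After pulling the Freqtrade image, running its `create-userdir` command builds the user data directory structure, and `new-config` generates a starter configuration file.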

| Method | Complexity | Platform Support | Dependency Management |
|---|---|---|---|
| Docker Installation | Low | Windows, macOS, Linux | Automatic |
| Direct Installation | Medium | Linux, macOS, Windows | Manual |
| Virtual Environment | Medium | All Platforms | Semi-automatic |

Configuring User Directories and Tools

Proper organization separates strategies, data, and results into distinct folders, which keeps trading algorithms and historical data easy to manage.

Initial configuration requires defining exchange connections and risk parameters. Always begin with dry-run mode to simulate trades without financial risk. This testing phase identifies issues before committing capital.
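Freqtrade has its own dry-run mode, enabled through the `dry_run` setting in its configuration, so the code below is not its implementation. It is a minimal, hypothetical paper-trading ledger that illustrates what a dry run does: orders update a simulated balance, with fees deducted, while nothing is ever sent to an exchange. The `PaperBroker` name, the 0.1% fee, and the assumption that every order fills at the requested price are all illustrative.

```python
class PaperBroker:
    """Simulated spot account: fills every order at the requested price."""

    def __init__(self, cash: float, fee_rate: float = 0.001):
        self.cash = cash
        self.position = 0.0  # units of the base asset held
        self.fee_rate = fee_rate
        self.trades: list[tuple[str, float, float]] = []

    def buy(self, qty: float, price: float) -> None:
        cost = qty * price * (1 + self.fee_rate)  # notional plus fee
        if cost > self.cash:
            raise ValueError("insufficient simulated cash")
        self.cash -= cost
        self.position += qty
        self.trades.append(("buy", qty, price))

    def sell(self, qty: float, price: float) -> None:
        if qty > self.position:
            raise ValueError("cannot sell more than the simulated position")
        self.cash += qty * price * (1 - self.fee_rate)  # proceeds minus fee
        self.position -= qty
        self.trades.append(("sell", qty, price))

    def equity(self, mark_price: float) -> float:
        """Cash plus the position valued at the current market price."""
        return self.cash + self.position * mark_price


broker = PaperBroker(cash=1_000.0)
broker.buy(0.5, 1_000.0)   # spends 500 plus a 0.50 fee
broker.sell(0.5, 1_100.0)  # receives 550 minus a 0.55 fee
print(round(broker.equity(1_100.0), 2))  # 1048.95
```

Real fills suffer slippage and partial execution, so dry-run results are usually somewhat optimistic compared with live trading.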

This software is for educational purposes only. Do not risk money you cannot afford to lose. USE THE SOFTWARE AT YOUR OWN RISK.

Backtesting and Validating Your Strategy

Before deploying capital, thorough examination of your methodology using past market conditions provides essential insights. This validation process tests your approach against historical scenarios to reveal potential strengths and weaknesses.

Interpreting Backtesting Reports

Backtesting generates comprehensive reports with critical performance metrics. Understanding these numbers helps assess your system’s reliability.

Key indicators include total profit percentage and average profit per trade. The win rate shows the percentage of trades that closed at a profit. Maximum drawdown is the largest peak-to-trough decline in account equity during the test.

| Metric | Purpose | Ideal Range | Risk Indicator |
|---|---|---|---|
| Total Profit % | Overall return assessment | Positive and consistent | Low volatility preferred |
| Win Rate | Share of profitable trades | Strategy-dependent | Meaningful only alongside average win and loss size |
| Avg Trade Duration | Capital commitment time | Strategy-dependent | Shorter may reduce risk |
| Max Drawdown | Largest peak-to-trough decline | Below 20% | Lower is safer |
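These metrics can be computed from a list of per-trade returns. The sketch below assumes each return is a fraction of the account (0.02 for +2%) and that trades compound sequentially; it is a simplified illustration, not how any particular backtesting tool builds its report.

```python
def backtest_metrics(trade_returns: list[float]) -> dict[str, float]:
    """Total profit %, win rate %, and max drawdown % from per-trade returns."""
    equity = 1.0
    peak = 1.0
    max_drawdown = 0.0
    for r in trade_returns:
        equity *= 1.0 + r  # compound each trade into the account
        peak = max(peak, equity)
        max_drawdown = max(max_drawdown, (peak - equity) / peak)
    wins = sum(1 for r in trade_returns if r > 0)
    return {
        "total_profit_pct": (equity - 1.0) * 100,
        "win_rate_pct": 100 * wins / len(trade_returns) if trade_returns else 0.0,
        "max_drawdown_pct": max_drawdown * 100,
    }


print(backtest_metrics([0.10, -0.20, 0.05, 0.10]))
```

This example has a 75% win rate yet only about 1.6% total profit after a 20% drawdown, which is why win rate says little on its own.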

Using Historical Data to Improve Performance

High-quality historical data should span different market environments. Testing across bull, bear, and sideways conditions ensures robustness.

Multiple timeframes and asset pairs reveal how your approach adapts. This comprehensive testing identifies weaknesses before live deployment. The goal is consistent performance across various scenarios.

Effective validation involves analyzing risk-adjusted returns alongside pure profitability. This balanced assessment creates more reliable automated systems for market participation.
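A common risk-adjusted measure is the Sharpe ratio: mean excess return divided by the standard deviation of returns, scaled to an annual figure. The sketch below assumes daily returns and 365 periods per year (crypto markets trade every day), and spreads an annual risk-free rate evenly across periods; these are assumptions to adjust for your own data. The sample returns are illustrative values, not results.

```python
import math
import statistics


def sharpe_ratio(returns: list[float], periods_per_year: int = 365, risk_free_annual: float = 0.0) -> float:
    """Annualized Sharpe ratio from periodic (e.g. daily) returns.

    The annual risk-free rate is divided evenly across periods, a simple
    approximation. Uses the sample standard deviation.
    """
    if len(returns) < 2:
        raise ValueError("need at least two returns")
    excess = [r - risk_free_annual / periods_per_year for r in returns]
    stdev = statistics.stdev(excess)
    if stdev == 0:
        raise ValueError("returns have zero variance")
    return statistics.mean(excess) / stdev * math.sqrt(periods_per_year)


daily_returns = [0.01, -0.005, 0.004, 0.002, -0.003, 0.006]  # illustrative values
print(f"{sharpe_ratio(daily_returns):.2f}")
```

Very short samples like this one give unstable estimates; Sharpe ratios are more meaningful over months of daily data.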

Optimizing Bot Performance Through Data Analysis

Continuous improvement of automated market participants depends on thorough evaluation of execution patterns. Systematic review of multiple dimensions reveals specific areas needing enhancement.

Comprehensive metrics collection forms the foundation for meaningful analysis. Detailed trade logs capture entry and exit prices, timestamps, and position sizes. This information reveals the market conditions triggering each decision.

Performance attribution analysis identifies which strategy components drive returns. It determines whether profits come from trend-following or mean-reversion approaches. Understanding these patterns enables targeted refinements.

Statistical techniques uncover important behavioral characteristics. They reveal whether returns follow normal distributions or exhibit extreme outcomes. This analysis helps assess whether results stem from skill or random chance.

Optimization requires careful balance between improvement and overfitting risks. Validation techniques like walk-forward analysis ensure changes work across different environments. Continuous monitoring detects performance degradation early.
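Walk-forward analysis tunes parameters on one window of history, evaluates them on the window that immediately follows, then rolls both windows forward. The sketch below only generates the index ranges; the window sizes are arbitrary, and the optimization and evaluation steps are left to your own code.

```python
from typing import Iterator


def walk_forward_splits(n: int, train: int, test: int) -> Iterator[tuple[range, range]]:
    """Yield (train_range, test_range) index windows over n observations.

    Each test window starts right after its training window, so parameters
    are always evaluated on data they were not fitted to.
    """
    start = 0
    while start + train + test <= n:
        yield range(start, start + train), range(start + train, start + train + test)
        start += test  # roll forward by one test window


for train_idx, test_idx in walk_forward_splits(n=10, train=4, test=2):
    print(list(train_idx), list(test_idx))
```

Stitching the out-of-sample test windows together gives a performance record that no single parameter fit has seen in advance.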

The enhancement process follows an iterative cycle. It involves hypothesis formation, implementation, rigorous testing, and careful deployment. Each iteration builds toward more reliable automated systems.

Integrating APIs and Ensuring Exchange Connectivity

Reliable exchange connectivity separates functional automation from theoretical concepts. This connection enables your system to interact directly with financial platforms.

Secure integration methods protect your assets and information. Proper credential management prevents unauthorized access to your accounts.

Secure API Integration Methods

API keys require careful permission settings for different operations. Read-only access suffices for market monitoring; only keys that place orders need trading permission, and a bot's keys should never have withdrawal rights.

Storing credentials in environment variables keeps them out of your source code. Hardcoded keys can leak through version control and shared code.
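A minimal sketch of loading credentials from environment variables. The variable names `EXCHANGE_API_KEY` and `EXCHANGE_API_SECRET` are hypothetical; use whatever names your deployment defines.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ExchangeCredentials:
    api_key: str
    api_secret: str

    def __repr__(self) -> str:
        # Mask both values so printing or logging the object never leaks them.
        return "ExchangeCredentials(api_key='***', api_secret='***')"


def load_credentials(prefix: str = "EXCHANGE") -> ExchangeCredentials:
    """Read API credentials from environment variables, failing loudly if absent."""
    key = os.environ.get(f"{prefix}_API_KEY")
    secret = os.environ.get(f"{prefix}_API_SECRET")
    if not key or not secret:
        raise RuntimeError(f"set {prefix}_API_KEY and {prefix}_API_SECRET")
    return ExchangeCredentials(key, secret)
```

Failing at startup when a variable is missing is safer than discovering it on the first order; the custom `__repr__` keeps an accidental `print(creds)` from exposing the secret.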

Authentication schemes vary between platforms, but private endpoints typically require each request to carry the API key and a cryptographic signature.
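Many exchanges authenticate private requests with an HMAC-SHA256 signature computed over the request parameters using the API secret. Binance, for example, signs the query string, appends it as a `signature` parameter, and expects the key in an `X-MBX-APIKEY` header. The sketch below follows that general pattern, but parameter ordering, timestamp fields, and header names differ between exchanges, so check each exchange's API documentation before relying on it.

```python
import hashlib
import hmac
import time
from urllib.parse import urlencode


def sign_request(params: dict, api_key: str, api_secret: str) -> tuple[str, dict[str, str]]:
    """Return (signed query string, headers) for an HMAC-SHA256-authenticated request.

    Modeled on Binance-style signing; other exchanges use different schemes.
    """
    payload = dict(params)
    payload.setdefault("timestamp", int(time.time() * 1000))  # milliseconds
    query = urlencode(payload)
    signature = hmac.new(api_secret.encode(), query.encode(), hashlib.sha256).hexdigest()
    headers = {"X-MBX-APIKEY": api_key}
    return f"{query}&signature={signature}", headers


query, headers = sign_request({"symbol": "BTCUSDT", "side": "BUY"}, "my-key", "my-secret")
print(query)
```

The secret never travels with the request; the exchange recomputes the signature on its side and rejects the call if the parameters were altered.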

Managing Real-Time Market Data Flow

WebSocket connections deliver instant price updates and order changes. This approach reduces latency compared to repeated polling.

Efficient data parsing minimizes processing delays. Your system must handle high-volume information streams effectively.

Key technical considerations include:

- Rate limiting policies across different exchanges

- Failover mechanisms for unreliable connections

- Geographic server placement for reduced latency

- Local caching for frequently accessed information
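Rate limiting can be handled client-side with a token bucket: each request spends a token, and tokens refill at a fixed rate, which allows short bursts while capping the average. The sketch below is a generic, single-threaded implementation; the 10-requests-per-second figure is a placeholder, since each exchange publishes its own limits, often as weighted request budgets.

```python
import time
from typing import Callable


class TokenBucket:
    """Client-side rate limiter: bursts up to `capacity`, averages `rate` per second."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start full
        self.clock = clock        # injectable for testing
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; never blocks."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def acquire(self, cost: float = 1.0) -> None:
        """Block until `cost` tokens can be spent."""
        if cost > self.capacity:
            raise ValueError("cost exceeds bucket capacity")
        while not self.try_acquire(cost):
            time.sleep((cost - self.tokens) / self.rate)


bucket = TokenBucket(rate=10, capacity=10)  # placeholder: 10 requests/second
bucket.acquire()  # call before each REST request
```

The `cost` parameter lets heavier endpoints spend more than one token, mirroring the weighted limits some exchanges use.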

Redundancy systems maintain operation during exchange downtime. Multiple data sources provide backup when primary connections fail.
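A minimal failover pattern tries data sources in priority order and returns the first successful result. The `primary` and `backup` functions here are hypothetical stand-ins for real exchange clients, and the price is made up; in practice you would also log each failure and bound how long each attempt may take.

```python
from typing import Callable, Sequence


def fetch_with_failover(sources: Sequence[Callable[[], float]]) -> float:
    """Return the first price obtained from `sources`, tried in order."""
    errors: list[Exception] = []
    for source in sources:
        try:
            return source()
        except Exception as exc:  # a real bot would catch narrower, client-specific errors
            errors.append(exc)
    raise RuntimeError(f"all {len(sources)} data sources failed: {errors}")


def primary() -> float:
    raise ConnectionError("primary exchange unreachable")  # simulated outage


def backup() -> float:
    return 64_250.0  # hypothetical price from a secondary venue


print(fetch_with_failover([primary, backup]))  # 64250.0
```

Prices from different venues can differ slightly, so a strategy fed from a backup source may need wider tolerances until the primary recovers.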

Latency optimization becomes critical during volatile market conditions, when even brief delays between a signal and its order can translate into slippage and worse fills.

Risk Management and Regulatory Considerations

Long-term success in algorithmic market participation hinges on disciplined capital protection protocols. These systems must prioritize safeguarding investments above maximizing short-term gains.

Implementing Effective Stop-Loss Strategies

Position sizing algorithms determine appropriate capital allocation for each transaction. They consider account size, asset volatility, and maximum acceptable loss percentages.
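A widely used form is fixed-fractional sizing: risk a set percentage of the account on each trade and derive the position size from the distance between entry and stop-loss. The sketch below assumes a long spot position and ignores fees and slippage; the 1% risk figure and the prices are examples, not recommendations.

```python
def position_size(equity: float, risk_pct: float, entry: float, stop: float) -> float:
    """Units to buy so that hitting the stop loses about risk_pct of equity."""
    if not entry > stop > 0:
        raise ValueError("for a long position the stop must be below the entry")
    risk_amount = equity * risk_pct  # money at risk on this trade
    risk_per_unit = entry - stop     # loss per unit if the stop is hit
    return risk_amount / risk_per_unit


size = position_size(equity=10_000, risk_pct=0.01, entry=50_000, stop=48_000)
print(size)  # 0.05
```

Here the position is worth $2,500 (0.05 units at $50,000), yet hitting the stop costs about $100, or 1% of equity. A price gap through the stop can still lose more.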

Stop-loss mechanisms automatically exit positions before losses become significant. Fixed percentage stops provide straightforward protection. Volatility-based stops, for example ones placed a multiple of the Average True Range (ATR) below entry, widen and tighten as market conditions change.

Trailing stops lock in profits as positions move favorably. This balanced approach maintains protection while allowing gains to accumulate.
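A trailing stop needs only a little state: the stop starts a fixed percentage below entry and ratchets up behind the highest price seen, never moving down. This sketch assumes a long position, a 5% trail, and evaluation on closing prices only; the `TrailingStop` class is illustrative.

```python
class TrailingStop:
    def __init__(self, entry: float, trail_pct: float = 0.05):
        self.trail_pct = trail_pct
        self.highest = entry
        self.stop = entry * (1 - trail_pct)  # initial fixed-percentage stop

    def update(self, price: float) -> bool:
        """Feed a new price; return True if the stop has been hit."""
        if price > self.highest:
            self.highest = price
            # Ratchet the stop up; max() guarantees it never moves down.
            self.stop = max(self.stop, price * (1 - self.trail_pct))
        return price <= self.stop


ts = TrailingStop(entry=100.0)
for p in [102, 110, 108, 104, 103]:
    if ts.update(p):
        print(f"exit at {p}, stop was {ts.stop:.2f}")  # exit at 104, stop was 104.50
        break
```

The trade exits with a gain of about 4% even though the price gave back part of its move, which is the profit-locking behavior described above.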

Maintaining Compliance in Automated Trading

Regulatory requirements vary significantly across different jurisdictions. Some regions have clear frameworks for automated systems. Others operate in evolving regulatory environments.

Compliance features include detailed audit trails of all activity. These records show timestamps, prices, and decision rationale. Proper documentation supports regulatory review when necessary.
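One lightweight way to keep such an audit trail is a JSON Lines file with one record per decision, opened only in append mode. The field names and both function names below are hypothetical; what matters is capturing a UTC timestamp, the order details, and the rationale in a machine-readable form.

```python
import json
from datetime import datetime, timezone


def audit_record(symbol: str, side: str, qty: float, price: float, reason: str) -> str:
    """Build one JSON Lines audit entry with a UTC timestamp."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "symbol": symbol,
        "side": side,
        "qty": qty,
        "price": price,
        "reason": reason,
    }
    return json.dumps(record)


def append_audit(path: str, line: str) -> None:
    """Append one entry; 'a' mode adds to the file without truncating earlier records."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")
```

A call such as `append_audit("audit.jsonl", audit_record("BTC/USDT", "buy", 0.05, 50_000.0, "short SMA crossed above long SMA"))` adds one line per decision, and each line can be parsed independently during a review.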

Monitoring systems provide essential human oversight of automated operations. Real-time dashboards display current positions and performance metrics. Alert systems notify users of unusual conditions or excessive drawdowns.

Addressing Technical Challenges and System Resilience

Real-world deployment introduces reliability challenges not always apparent during development. Production environments demand robust solutions for handling unpredictable conditions and infrastructure limitations.

Exchange platforms impose strict request limits to protect their infrastructure. Intelligent throttling systems manage these constraints effectively. They prioritize critical operations while respecting platform boundaries.

Handling API Rate Limiting and Latency

Latency matters most during volatile periods, when prices can move between the moment a signal fires and the moment an order fills. Hosting servers geographically close to an exchange's infrastructure reduces round-trip delays.

Persistent WebSocket connections deliver real-time data with minimal delay. Keeping per-message processing lightweight prevents the system from falling behind the feed. These techniques maintain competitive response times.

Robust Error Handling and Performance Monitoring

Comprehensive error management ensures continuous operation during unexpected events. Systems validate incoming data before processing decisions. Graceful degradation maintains core functions when advanced features fail.
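Validating incoming data can be as simple as checking a ticker payload for required fields and sane values before any strategy code sees it. The field names, the Unix-seconds timestamp, and the 5-second staleness limit below are assumptions for illustration; real payload formats differ by exchange and library.

```python
import math
import time
from typing import Optional

REQUIRED_FIELDS = ("symbol", "bid", "ask", "timestamp")


def validate_ticker(tick: dict, max_age_s: float = 5.0, now: Optional[float] = None) -> list[str]:
    """Return a list of problems; an empty list means the tick looks usable.

    Assumes `timestamp` is in Unix seconds.
    """
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in tick]
    if problems:
        return problems
    bid, ask = tick["bid"], tick["ask"]
    if not all(isinstance(x, (int, float)) and math.isfinite(x) and x > 0 for x in (bid, ask)):
        problems.append("bid/ask must be positive finite numbers")
    elif bid > ask:
        problems.append("crossed book: bid above ask")
    now = time.time() if now is None else now
    if now - tick["timestamp"] > max_age_s:
        problems.append("stale tick")
    return problems


tick = {"symbol": "BTC/USDT", "bid": 64_000.0, "ask": 64_001.0, "timestamp": time.time()}
print(validate_ticker(tick))  # []
```

Returning a list of problems rather than raising on the first one makes it easy to log every issue and simply skip the tick, which fits the graceful-degradation approach described above.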

Performance tracking covers both financial results and technical metrics. Alert systems notify operators when values exceed acceptable thresholds. This dual monitoring approach detects issues early.

| Error Type | Detection Method | Recovery Action | Impact Level |
|---|---|---|---|
| API Connection Loss | Heartbeat Monitoring | Failover to Backup | High |
| Data Validation Failure | Schema Checking | Request Retry | Medium |
| Resource Exhaustion | Memory Monitoring | Process Restart | Critical |
| Network Latency Spike | Response Time Tracking | Connection Reset | Medium |
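Request retries are commonly implemented with exponential backoff, so repeated failures do not hammer an exchange that is already struggling or rate-limiting you. The sketch below retries a callable with doubling delays plus random jitter; the attempt count, base delay, and the choice of `ConnectionError` and `TimeoutError` as the retryable exceptions are assumptions to tune for your client library.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient errors with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            delay = base_delay * 2 ** attempt        # 0.5, 1, 2, 4, ... seconds
            sleep(delay + random.uniform(0, delay))  # jitter de-synchronizes retries
    raise AssertionError("unreachable")  # the loop always returns or raises
```

Wrapping a call looks like `retry_with_backoff(lambda: client.fetch_price("BTC/USDT"))`, where `client.fetch_price` is a hypothetical method. Non-retryable errors, such as a rejected order, propagate immediately instead of being repeated.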

Automatic restart mechanisms recover from crashes without manual intervention. Health checks verify component functionality regularly. Redundant data sources provide backup during primary exchange issues.
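A heartbeat-style health check can be as simple as recording when each component last reported in and flagging any that have gone quiet. The component names and the 30-second timeout below are hypothetical; the restart or failover action would hang off the list that `stale()` returns.

```python
import time
from typing import Callable


class HeartbeatMonitor:
    def __init__(self, timeout_s: float = 30.0, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock  # injectable for testing
        self.last_seen: dict[str, float] = {}

    def beat(self, component: str) -> None:
        """Record that a component is alive right now."""
        self.last_seen[component] = self.clock()

    def stale(self) -> list[str]:
        """Components that have not reported within the timeout."""
        now = self.clock()
        return [name for name, t in self.last_seen.items() if now - t > self.timeout_s]


monitor = HeartbeatMonitor()
monitor.beat("market_data")     # each component calls beat() on every loop iteration
monitor.beat("order_executor")
print(monitor.stale())          # [] immediately after the beats
```

A supervisor loop can call `stale()` every few seconds and restart or fail over any component it returns.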

These resilience measures protect capital during technical problems. They transform experimental code into reliable financial platforms. Proper implementation ensures consistent market participation.

Conclusion

The fusion of computational intelligence with digital asset markets creates powerful tools for modern investors. This guide has walked through the essential components for developing systematic approaches to market participation.

Creating automated financial systems represents an achievable goal for developers with basic programming skills. The journey begins with simple methodologies and progressively incorporates complexity. Thorough testing and realistic expectations about performance remain crucial.

Beyond personal applications, these skills open significant business opportunities. Custom platform development for clients, SaaS solutions, and consulting services represent viable career paths. The demand for expertise in optimizing automated systems continues to grow.

Success requires balancing profit objectives with capital preservation through effective risk protocols. Market conditions constantly evolve, demanding adaptable approaches. Continuous learning ensures your systems remain effective as financial markets develop.

To get started, focus on establishing your development environment and testing with historical data. Remember that all automated participation involves substantial risk. Never commit more capital than you can afford to lose while maintaining realistic expectations about potential profits.

FAQ

What is the primary advantage of using an automated system for cryptocurrency trading?

The main benefit is the ability to execute trades 24/7 based on predefined strategies, removing emotional decisions and reacting instantly to market data and price movements.

Why is Python a popular choice for developing these automated systems?

Python is favored due to its simplicity, extensive libraries for data analysis and machine learning, and strong support for API integration with major exchanges like Binance and Coinbase.

What are the essential components needed to get started with a custom trading bot?

You will need access to real-time and historical market data, a reliable exchange API, a strategy incorporating technical indicators or machine learning models, and a robust risk management framework.

How important is backtesting for validating a trading strategy?

Backtesting is critical. It allows you to simulate your strategy against historical data to assess its potential performance and refine it before risking real capital in live markets.

What role does machine learning play in advanced trading algorithms?

Machine learning can analyze vast amounts of market data to identify complex, non-linear patterns and generate predictive signals, potentially adapting to different market conditions more effectively than static rules.

How can I manage trading fees to protect my profits?

Understanding the fee structure of your exchange is vital. Optimizing trade frequency and size, and choosing cost-efficient platforms, can significantly reduce the impact of fees on overall returns.

What are the key risk management practices for automated trading?

Essential practices include implementing stop-loss and take-profit orders, diversifying strategies, carefully managing position sizing, and continuously monitoring the system’s performance.

What technical challenges should I anticipate when running a bot?

Common issues include API rate limiting, network latency, system downtime, and handling errors gracefully. Building a resilient system with proper monitoring and logging is crucial for long-term operation.
