This introduction sets the stage for a practical guide that shows how to move from raw price data to model-driven insights.

We define correlation as a measure of how closely assets move together, on a scale from -1 to 1. Then we preview the workflow: fetch adjusted close prices (for BTC-USD, ETH-USD, LTC-USD, BNB-USD), compute daily returns with pct_change().dropna(), and visualize the correlation matrix as a seaborn heatmap.

The article is written for a U.S. audience that wants to use data to guide trading, manage risk, and find cryptocurrencies that tend to rise or fall together. This matters because volatile crypto prices often move in sync, which affects diversification and hedging choices.

Finally, we introduce time series modeling. Moving from simple baselines to gradient-boosted trees and recurrent networks, we compare models on RMSE and show how rolling windows keep estimates current. The result is a how-to path readers can reproduce.

Key Takeaways

- Correlation measures co-movement on a -1 to 1 scale and guides portfolio choices.

- Workflow: fetch adjusted prices, compute daily returns, build correlation matrices, then model time series.

- BTC and ETH serve as benchmarks; altcoins reveal broader market links.

- Advanced models like gradient-boosting and RNNs can beat simple baselines on RMSE for volatile series.

- Use rolling windows to track evolving market relationships over time.

Why correlations matter in cryptocurrency markets

Measuring co-movement between major coins reveals when a market-wide swing is underway. Traders use this insight to time entries, set hedges, and improve diversification across assets.

User intent: data-driven trading, diversification, and hedging

Correlations help identify which coins tend to move together or apart over time. Exchanges such as Coinbase surface correlation indicators based on Pearson's coefficient, computed over a recent window of USD prices (for example, 90 days).

How co-movements shape BTC and ETH performance

BTC and ETH often anchor the broader market. Rising correlation between these two and smaller coins means hedges may fail because prices move in sync.

- When correlations rise, diversification value falls.

- When they fall, you can regain diversification and pair assets for relative-value plays.

- Re-estimate correlations frequently; series behavior changes across regimes.

| Signal | Implication | Action |
|---|---|---|
| High correlation | Market-wide moves | Tighten risk limits |
| Low correlation | Idiosyncratic moves | Expand pair selection |
| Shifting correlation | Regime change | Re-run models and tests |

Correlations drift across regimes, so track them over time with rolling windows rather than relying on a single full-sample estimate. Combine these signals with volatility, liquidity, and execution checks before trading.

Core concepts: correlation, randomness, and time series behavior

Measuring linear ties between return series reveals whether assets rise in sync or diverge. The Pearson r is a simple function that maps co-variation of two return series to a bounded number between -1 and 1. Negative values imply opposite moves, zero implies no linear link, and positive values imply same-direction moves.
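Written out, Pearson's r is the covariance of the two series divided by the product of their standard deviations, which is what bounds it to [-1, 1]. A minimal sketch that computes it by hand and checks it against pandas' built-in Pearson correlation; the two return series are made-up numbers for illustration, not market data.

```python
import numpy as np
import pandas as pd


def pearson_r(x, y) -> float:
    """Pearson correlation: summed co-deviations over the product of deviation norms."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()
    dy = y - y.mean()
    return float((dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum()))


# Hypothetical daily returns for two coins (illustrative numbers, not market data)
coin_a = pd.Series([0.010, -0.020, 0.015, 0.003, -0.007])
coin_b = pd.Series([0.012, -0.018, 0.010, 0.001, -0.009])

r_manual = pearson_r(coin_a, coin_b)
r_pandas = coin_a.corr(coin_b)  # Series.corr defaults to method="pearson"
print(f"manual r = {r_manual:.4f}, pandas r = {r_pandas:.4f}")
```

A series correlated with its own negation gives exactly -1, and any positive linear rescaling of a series gives +1, which is a quick sanity check on the bounds.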

Crypto price series are often nonstationary: means and variances shift, and volatility clusters. In practice, many coins behave close to a random walk, where today’s price ≈ yesterday’s price plus noise.

Short-lived autocorrelation can appear in returns. That makes rolling windows and robust estimators vital. Use ACF/PACF plots and unit-root tests to diagnose stationarity, and compute rolling correlation to track evolving links over time.
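To make the random-walk behavior concrete, the sketch below simulates a synthetic price path (log price plus Gaussian noise, not real data) and compares sample autocorrelations of price levels and of daily returns using pandas' Series.autocorr. Levels show autocorrelation near 1, the signature of nonstationarity, while returns hover near 0. For formal diagnostics, statsmodels offers adfuller (augmented Dickey-Fuller unit-root test) and plot_acf/plot_pacf; this sketch sticks to pandas to stay self-contained.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(seed=42)
n_days = 1000

# Simulated random walk in log prices: log p_t = log p_{t-1} + noise (synthetic data)
log_price = np.log(100.0) + np.cumsum(rng.normal(0.0, 0.03, size=n_days))
dates = pd.date_range("2023-01-01", periods=n_days, freq="D")
price = pd.Series(np.exp(log_price), index=dates, name="price")
returns = price.pct_change().dropna()


def sample_acf(series: pd.Series, max_lag: int = 5) -> pd.Series:
    """Sample autocorrelation at lags 1..max_lag via pandas' Series.autocorr."""
    return pd.Series({lag: series.autocorr(lag=lag) for lag in range(1, max_lag + 1)})


price_acf = sample_acf(price)
return_acf = sample_acf(returns)
print("price levels ACF:", price_acf.round(3).to_dict())
print("daily returns ACF:", return_acf.round(3).to_dict())
```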

- Validate models with time-aware splits to avoid leakage.

- Ensure enough observations: fat tails increase the number of observations needed to stabilize estimates.

- Nonlinear methods, including neural networks and recurrent neural approaches, can capture complex dependencies but need strong regularization.

| Check | Tool | Purpose |
|---|---|---|
| Autocorrelation | ACF / PACF | Detect serial dependence |
| Stationarity | Unit-root tests | Assess fixed moments |
| Changing links | Rolling estimates | Track evolving relationships |

Data setup: pulling closing prices and returns for BTC, ETH, and various cryptocurrencies

Start by assembling a clean panel of adjusted close prices for each coin over a fixed date range. Use yfinance to download tickers such as ['BTC-USD', 'ETH-USD', 'LTC-USD', 'BNB-USD'] for 2023-01-01 to 2024-01-01 and select the Adj Close field.

Fetching adjusted closing prices with Python (yfinance, pandas)

Download the adjusted closing prices, then compute daily returns with pct_change().dropna(). The adjusted close gives each asset a consistent price series; for crypto pairs it typically matches the regular close, since there are no dividends or splits to adjust for.
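A minimal sketch of this step, assuming yfinance is installed and network access is available. It passes auto_adjust=False because recent yfinance releases adjust prices by default and then omit the Adj Close column. The helper names fetch_adj_close and to_returns are illustrative, not part of any library.

```python
import pandas as pd

TICKERS = ["BTC-USD", "ETH-USD", "LTC-USD", "BNB-USD"]


def fetch_adj_close(tickers=TICKERS, start="2023-01-01", end="2024-01-01") -> pd.DataFrame:
    """Download daily adjusted closes with yfinance (requires network access)."""
    import yfinance as yf  # imported lazily so the offline helper below works without it

    raw = yf.download(tickers, start=start, end=end, auto_adjust=False, progress=False)
    return raw["Adj Close"]  # one column per ticker


def to_returns(prices: pd.DataFrame) -> pd.DataFrame:
    """Daily percentage returns; the first row is NaN and is dropped."""
    return prices.pct_change().dropna()
```

Usage: prices = fetch_adj_close(), then returns = to_returns(prices). Keeping the download separate from the return calculation lets you rerun the analysis from saved prices without hitting the network.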

Choosing assets, time windows, and handling missing data

Start with BTC and ETH as anchors, then add LTC and BNB to capture breadth across other cryptocurrencies. A full year gives more stable estimates, while shorter windows react faster to regime shifts.

- Align series: keep UTC timestamps and a common index.

- Missing data: forward-fill small gaps or drop dates with sparse coverage.

- Save artifacts: store raw prices, aligned panels, and returns for reproducibility.

| Choice | Why | Action |
|---|---|---|
| Tickers | Cross-section | BTC, ETH, LTC, BNB |
| Window | Stability vs. recency | 1 year default |
| Missing | Integrity | Forward-fill or drop |
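The alignment and missing-data rules above can be sketched as one helper. This is a minimal sketch under stated assumptions: align_panel is a hypothetical name, and the defaults (forward-fill at most 2 consecutive days, drop dates where fewer than 75% of assets have a price) are illustrative thresholds, not values from the article.

```python
import numpy as np
import pandas as pd


def align_panel(prices: pd.DataFrame, max_ffill: int = 2, min_coverage: float = 0.75) -> pd.DataFrame:
    """Put all assets on one daily UTC index, fill small gaps, drop sparse dates.

    max_ffill: forward-fill at most this many consecutive missing days per asset.
    min_coverage: keep a date only if at least this share of assets has a price.
    """
    panel = prices.sort_index()
    idx = pd.DatetimeIndex(panel.index)
    # Treat naive timestamps as UTC; convert timezone-aware ones to UTC
    idx = idx.tz_localize("UTC") if idx.tz is None else idx.tz_convert("UTC")
    panel.index = idx.normalize()
    panel = panel[~panel.index.duplicated(keep="last")]
    # Reindex to a complete daily calendar so missing days become explicit NaNs
    full_index = pd.date_range(panel.index.min(), panel.index.max(), freq="D")
    panel = panel.reindex(full_index).ffill(limit=max_ffill)
    min_count = int(np.ceil(min_coverage * panel.shape[1]))
    return panel.dropna(thresh=min_count)
```

Any NaNs that survive (a gap longer than max_ffill on a date that still meets the coverage threshold) are removed later by pct_change().dropna(). Saving both the raw download and the aligned panel keeps the run reproducible.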

From prices to signals: daily returns, normalization, and rolling windows

Convert daily closing series into percentage returns to turn prices into actionable signals. This keeps models focused on change rather than level and reduces issues from differing price scales.

Calculating percentage returns and dropping NaNs

Use pandas: pull the Adj Close column, then call pct_change(). The first row becomes NaN; remove it with dropna().

Verify that all assets share the same index. Aligning time stamps prevents biased estimates caused by missing trading days.

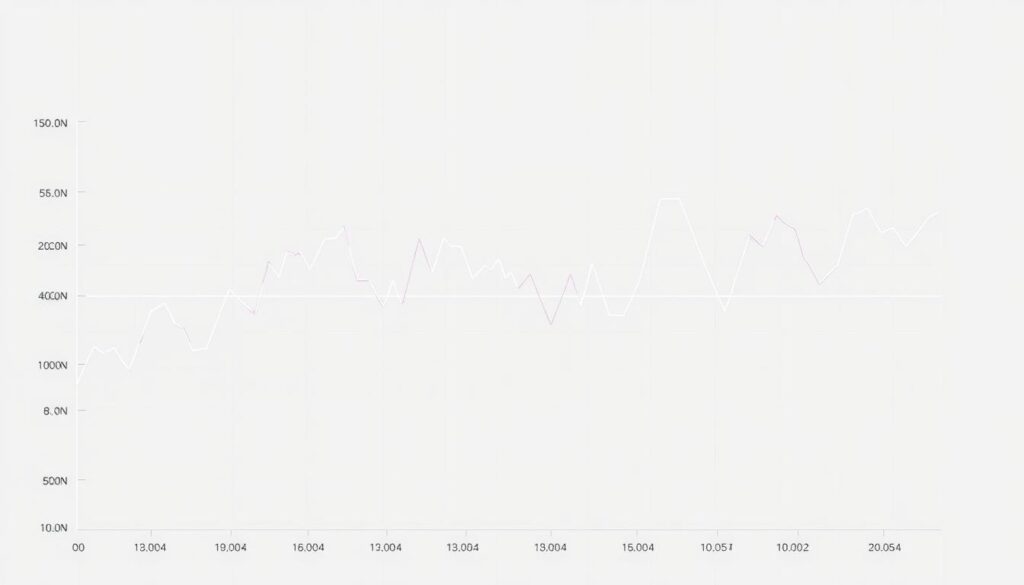

Rolling correlations to detect shifting market regimes

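Apply rolling windows (for example, 30 or 90 days) with rolling(window, min_periods=<n>).corr(). Called on one return series with another series as the argument, this yields one pairwise correlation per date; called on a whole returns DataFrame, it yields a full correlation matrix per date. Short windows react fast but are noisy. Longer windows are smoother but lag.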

- Save outputs with timestamps to compare subperiods and support model selection.

- Plot heatmaps over rolling slices to reveal regime shifts visually.

- Record window size and min_periods; the number of observations per window affects confidence in each value.
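Both forms of the rolling call can be sketched on synthetic returns. The helper names and the window/min_periods values are illustrative assumptions; "ETH" is constructed to co-move with "BTC" and "ALT" is independent noise, so the output is not market data.

```python
import numpy as np
import pandas as pd


def rolling_pair_corr(returns: pd.DataFrame, a: str, b: str, window: int = 30, min_periods: int = 20) -> pd.Series:
    """Rolling Pearson correlation between two return columns, one value per date."""
    return returns[a].rolling(window, min_periods=min_periods).corr(returns[b])


def rolling_corr_matrices(returns: pd.DataFrame, window: int = 90, min_periods: int = 60) -> pd.DataFrame:
    """All pairwise rolling correlations, indexed by (date, asset)."""
    return returns.rolling(window, min_periods=min_periods).corr()


# Synthetic returns: ETH is built from BTC plus noise; ALT is independent
rng = np.random.default_rng(seed=7)
dates = pd.date_range("2023-01-01", periods=365, freq="D")
btc = rng.normal(0.0, 0.03, len(dates))
returns = pd.DataFrame(
    {
        "BTC": btc,
        "ETH": 0.8 * btc + 0.6 * rng.normal(0.0, 0.03, len(dates)),
        "ALT": rng.normal(0.0, 0.05, len(dates)),
    },
    index=dates,
)

btc_eth_30d = rolling_pair_corr(returns, "BTC", "ETH")
matrices = rolling_corr_matrices(returns)
latest = matrices.xs(dates[-1], level=0)  # 3x3 correlation matrix on the last date
print(btc_eth_30d.dropna().describe().round(2))
print(latest.round(2))
```

Values before min_periods observations are NaN, which is why recording window size and min_periods alongside the output matters.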

Why closing prices and time alignment matter

Closing price choices and synchronized time indexes ensure comparable returns across assets. Misaligned calendars or gaps distort metrics and downstream models.

| Step | Why | Tip |
|---|---|---|
| pct_change() | Creates return series | dropna() the first row |
| rolling() | Captures dynamics | Choose 30–90 day windows |
| Alignment | Reduces bias | Resample or forward-fill small gaps |

Correlation analysis across cryptocurrencies

A clear heatmap turns a table of numbers into a visual map of co-movement across coins.

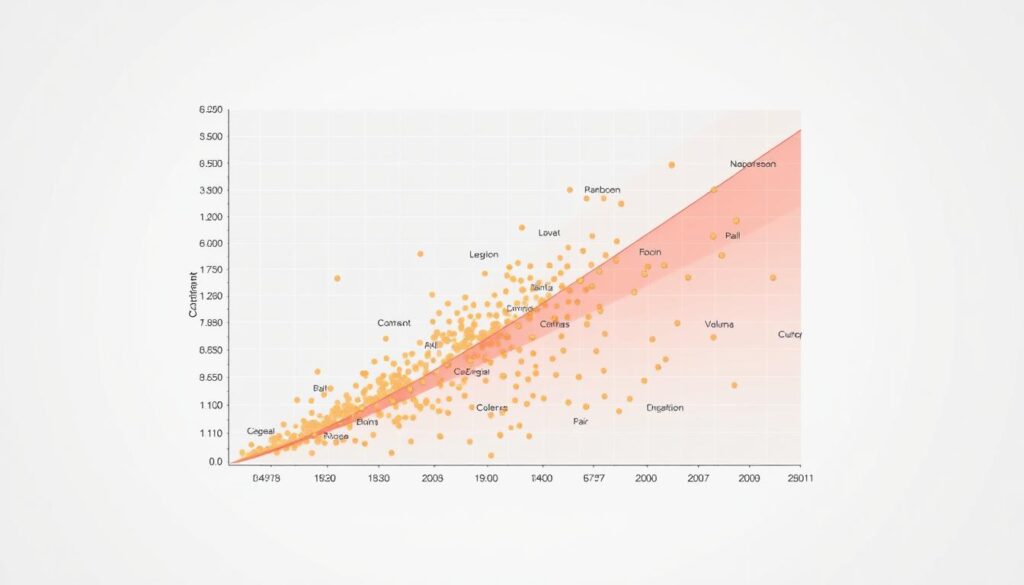

Visualizing correlation matrices and heatmaps

Calculate a correlation matrix from daily returns and render it with seaborn.heatmap. Use a coolwarm colormap and annot=True to show coefficient values on each cell.

Label axes with tick labels from your asset list and sort by average correlation to surface clusters. Keep the number of assets under 25 to avoid overcrowding the plot.
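A minimal sketch of the heatmap step, assuming matplotlib and seaborn are installed (they are imported inside the plotting function). The helper names are hypothetical; ordering by each asset's mean off-diagonal correlation is one simple way to "sort by average correlation" so tightly linked coins sit next to each other.

```python
import numpy as np
import pandas as pd


def order_by_mean_corr(corr: pd.DataFrame) -> pd.DataFrame:
    """Reorder rows and columns by each asset's average correlation with the others."""
    off_diagonal = corr.where(~np.eye(len(corr), dtype=bool))  # hide the 1.0 self-correlations
    order = off_diagonal.mean().sort_values(ascending=False).index
    return corr.loc[order, order]


def plot_corr_heatmap(returns: pd.DataFrame, title: str = "Daily return correlations"):
    """Render the sorted correlation matrix with seaborn (needs matplotlib and seaborn)."""
    import matplotlib.pyplot as plt
    import seaborn as sns

    corr = order_by_mean_corr(returns.corr())
    fig, ax = plt.subplots(figsize=(6, 5))
    sns.heatmap(
        corr,
        annot=True,                 # print each coefficient in its cell
        fmt=".2f",
        cmap="coolwarm",
        vmin=-1, vmax=1, center=0,  # fix the color scale to the full [-1, 1] range
        square=True,
        ax=ax,
    )
    ax.set_title(title)
    fig.tight_layout()
    return fig
```

Usage: fig = plot_corr_heatmap(returns), then fig.savefig("corr_heatmap.png"). Tick labels come from the DataFrame's index and columns, so the asset names appear automatically.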

Finding high positive and negative pairs across markets

Scan the matrix for strong positive and negative pairs. Flag values below -0.7 or above 0.7 as candidates, but treat thresholds as heuristics to validate with backtests.

- Export the matrix as CSV for audit and to feed into models that use cross-asset features.

- Compute matrices over multiple rolling windows to see stability or drift in relationships.

- Record BTC/ETH pair stats to track how flagship assets co-move with others over time.
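The threshold scan can be sketched as a small helper that reads each pair once from the upper triangle of the matrix. flag_pairs is a hypothetical name, and 0.7 simply mirrors the heuristic threshold above.

```python
import numpy as np
import pandas as pd


def flag_pairs(corr: pd.DataFrame, threshold: float = 0.7) -> pd.DataFrame:
    """Unique asset pairs with correlation above +threshold or below -threshold."""
    # Upper triangle only (k=1 skips the diagonal), so each pair appears once
    rows, cols = np.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    pairs = pd.DataFrame(
        {
            "asset_a": corr.index[rows],
            "asset_b": corr.columns[cols],
            "corr": corr.to_numpy()[rows, cols],
        }
    )
    flagged = pairs[pairs["corr"].abs() > threshold]
    # Strongest relationships first, regardless of sign
    return flagged.sort_values("corr", key=lambda s: s.abs(), ascending=False).reset_index(drop=True)
```

Usage: flag_pairs(returns.corr()).to_csv("flagged_pairs.csv", index=False) produces the audit CSV; running it on each rolling window's matrix shows whether a pair stays flagged or drifts.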

| Step | Why | Tip |
|---|---|---|
| Build matrix | Summarizes pairwise ties | Use daily returns from closing prices |
| Render heatmap | Visual interpretation | coolwarm + annot=True |
| Shortlist pairs | Candidate strategies | Apply thresholds & validate with tests |

Note: correlation does not imply causation. Use these visual signals to prioritize deeper tests, model features, and risk controls before trading.

Modeling choices: statistical methods vs. machine learning and deep learning

Start modeling with simple baselines to set a clear performance floor before moving to more complex approaches. Baselines include mean/median predictors and simple regression fits. They expose data quirks and define a minimum RMSE to beat.
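A minimal sketch of the baseline floor, assuming a single daily return series and a chronological 70/30 split. Besides the mean and median predictors named above, it adds two common naive forecasts (predict zero, and predict yesterday's return) as illustrative extras; regression baselines are omitted to keep it short. The series is synthetic.

```python
import numpy as np
import pandas as pd


def rmse(y_true, y_pred) -> float:
    """Root mean squared error."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def baseline_scores(returns: pd.Series, train_frac: float = 0.7) -> dict:
    """RMSE of simple one-step-ahead baselines on a chronological test split."""
    split = int(len(returns) * train_frac)
    train, test = returns.iloc[:split], returns.iloc[split:]
    preds = {
        "zero": np.zeros(len(test)),                              # predict no change
        "train_mean": np.full(len(test), train.mean()),           # historical mean
        "train_median": np.full(len(test), train.median()),       # historical median
        "last_return": returns.shift(1).iloc[split:].to_numpy(),  # yesterday's return
    }
    return {name: rmse(test, p) for name, p in preds.items()}


# Synthetic daily returns (not market data)
rng = np.random.default_rng(seed=0)
daily = pd.Series(rng.normal(0.0005, 0.03, 500), index=pd.date_range("2023-01-01", periods=500, freq="D"))
scores = baseline_scores(daily)
for name, score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name:>12}: RMSE {score:.5f}")
```

On near-random-walk returns, the constant predictors usually beat "yesterday's return", which is a useful reminder of how low the bar for a model really is.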

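Gradient-boosted trees are a strong choice for tabular features built from noisy, high-variance return series. XGBoost provides scalable, efficient tree building. LightGBM uses GOSS (gradient-based one-side sampling) and EFB (exclusive feature bundling) for speed and memory gains. CatBoost's ordered boosting helps reduce prediction shift, which matters most with small samples.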

Recurrent neural networks like LSTM and GRU model sequential dependence when many lags matter. Deep architectures can capture nonlinear temporal patterns but need careful regularization, early stopping, and dropout to avoid overfitting on noisy returns.

- Validation: use time-aware splits and rolling-origin tests.

- Hyperparameters: trees: 100–1,000, depth 4–8, learning rate 0.01–0.1; nets: 32–256 hidden units, dropout 0.1–0.5.

- Trade-offs: GBMs give feature importance; deep models need SHAP or integrated gradients for interpretation.

Decision framework: begin with baselines, escalate to GBMs for structured tabular features, and consider deep learning only when sequence depth and data volume justify the compute and latency costs.

Feature engineering for forecasting cryptocurrency prices

Good features turn noisy price feeds into stable signals that models can act on. Start with compact, time-aware transforms that keep timestamps aligned for BTC and ETH so you avoid leakage.

Lagged returns, rolling stats, and cross-asset inputs

Specify lag features such as 1, 3, 5, and 10-day returns. Add rolling measures: 7/30/90-day mean, rolling volatility, and z-scores to capture changing scale in the series.

Include cross-asset signals from a set of 14 altcoins (ADA, BAT, BNB, DASH, DOGE, LINK, LTC, NEO, QTUM, TRX, XLM, XMR, XRP, ZEC) to help models learn market-wide moves.
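A minimal sketch of these features for one target coin, assuming a returns DataFrame with one column per coin. build_features and the column names are hypothetical. Every feature for day t uses only data through day t-1, so the target (day t's return) never leaks into the inputs; the altcoin signal here is a plain cross-sectional average of the supplied altcoin columns.

```python
import numpy as np
import pandas as pd


def build_features(returns: pd.DataFrame, target: str, alts: list) -> pd.DataFrame:
    """Lagged returns, rolling stats, and an altcoin average; all known before day t."""
    r = returns[target]
    past = r.shift(1)  # information available before day t
    feats = pd.DataFrame(index=returns.index)
    for lag in (1, 3, 5, 10):
        feats[f"lag_{lag}"] = r.shift(lag)
    for w in (7, 30, 90):
        feats[f"mean_{w}d"] = past.rolling(w).mean()
        feats[f"vol_{w}d"] = past.rolling(w).std()
    feats["z_30d"] = (past - feats["mean_30d"]) / feats["vol_30d"]
    feats["alt_avg_lag_1"] = returns[alts].mean(axis=1).shift(1)  # market-wide altcoin move
    feats["target"] = r
    return feats.dropna()  # drop warm-up rows where the longest window is incomplete


# Synthetic returns with hypothetical column names
rng = np.random.default_rng(seed=1)
dates = pd.date_range("2023-01-01", periods=200, freq="D")
returns = pd.DataFrame(rng.normal(0.0, 0.03, (200, 4)), index=dates, columns=["BTC", "ADA", "LTC", "XRP"])
features = build_features(returns, target="BTC", alts=["ADA", "LTC", "XRP"])
print(features.shape)
```

The 90-day window dominates the warm-up: the first 90 rows are dropped, so longer windows cost usable history.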

Market microstructure and transforms

Use volume and market-cap proxies to capture liquidity shifts. Apply log transforms and standard scaling to stabilize variance and aid both tree and neural models.

- Limit the number of features to avoid overfitting; use regularization and feature selection.

- For networks, build windowed tensors that preserve temporal order.

- Validate with out-of-sample tests and ablation studies; save feature metadata (lags, windows).
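For the recurrent models, the windowed-tensor step can be sketched with NumPy alone. make_windows is a hypothetical helper; it produces the (samples, timesteps, features) layout that LSTM/GRU layers commonly expect, and pairs each window with the value immediately after it.

```python
import numpy as np


def make_windows(features: np.ndarray, target: np.ndarray, lookback: int):
    """Stack sliding windows into a (samples, lookback, n_features) tensor.

    Window i covers rows i .. i+lookback-1 and is paired with target[i+lookback],
    so every input row strictly precedes the value it predicts.
    """
    features = np.asarray(features, dtype=np.float32)
    target = np.asarray(target, dtype=np.float32)
    n = len(features) - lookback
    if n <= 0:
        raise ValueError("need more rows than lookback")
    X = np.stack([features[i : i + lookback] for i in range(n)])
    y = target[lookback : lookback + n]
    return X, y


# Synthetic example: 250 days x 6 features, 30-day lookback
rng = np.random.default_rng(seed=2)
feature_matrix = rng.normal(size=(250, 6))
daily_returns = rng.normal(size=250)
X, y = make_windows(feature_matrix, daily_returns, lookback=30)
print(X.shape, y.shape)
```

Windows are built in time order and should be split chronologically afterwards; shuffling before the split would leak future windows into training.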

| Feature | Purpose | Example |
|---|---|---|
| 1/3/5/10-day lags | Serial dynamics | r(t-1), r(t-3) |
| Rolling vol / z-score | Scale & regime | 30-day std, z(r) |
| Altcoin signals | Market moves | Avg return of the 14 altcoins |

Building and evaluating forecasting models for BTC/ETH with correlated altcoins

Practical forecasting starts with time-aware splits that prevent future data from leaking into training.

Train on earlier periods and validate on later ones. Use rolling-origin or expanding-window splits so test sets always follow training sets in time. This mirrors live deployment and reduces overly optimistic results.
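An expanding-window (rolling-origin) splitter can be sketched in a few lines. The function name and defaults are illustrative assumptions; scikit-learn's TimeSeriesSplit (with its test_size and max_train_size parameters) implements the same idea if you already depend on that library.

```python
import numpy as np


def expanding_window_splits(n_samples: int, n_splits: int = 5, test_size: int = 30, min_train: int = 60):
    """Yield (train_idx, test_idx) pairs for rolling-origin evaluation.

    Training always covers rows 0..origin-1 (an expanding window) and the test
    block is the next `test_size` rows, so no test row precedes any training row.
    """
    first_origin = n_samples - n_splits * test_size
    if first_origin < min_train:
        raise ValueError("not enough history for the requested splits")
    for k in range(n_splits):
        origin = first_origin + k * test_size
        yield np.arange(0, origin), np.arange(origin, origin + test_size)


for fold, (train_idx, test_idx) in enumerate(expanding_window_splits(365)):
    print(fold, "train rows 0 -", train_idx[-1], "| test rows", test_idx[0], "-", test_idx[-1])
```

Fitting every model (baselines, GBMs, RNNs) on the same folds keeps the RMSE comparison fair.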

Optimize models against RMSE on returns, and also track cumulative strategy returns in simple backtests: compute the daily portfolio return as the long leg's return minus the short leg's return, then cumulate with cumprod(1 + r). Use regression baselines to set a floor for improvement.

- Compare GBMs (XGBoost, LightGBM, CatBoost) to recurrent neural baselines (LSTM/GRU) using the same splits and features.

- Inspect GBM feature importance to see which altcoins and lags drive predictions for BTC and ETH, and how heavily the model relies on BTC-correlated signals.

- Limit hyperparameter trials, stress test across volatile windows, and document artifacts for reproducibility.

| Metric | Why | Action |
|---|---|---|
| RMSE | Statistical fit | Optimize on returns |
| Cumulative PnL | Practical impact | Backtest signals |
| Feature importance | Interpretability | Guide feature pruning |

From analysis to action: pairs trading, hedging, and risk controls

Turn statistical signals into tradable tactics by screening pairs with strong positive or negative relationships and then defining clear entry rules.

Identifying negatively and positively correlated pairs

Scan rolling matrices to shortlist pairs beyond chosen thresholds (example: below -0.7 or above 0.7). Focus on liquid coin pairs so you can enter and exit without large market impact.

Signal rules, backtesting, and transaction costs

Example rule: if BTC falls more than 1% while ETH rises more than 1%, go long ETH and short BTC the next day. Calculate next-day long-minus-short returns and cumulate them with cumprod(1 + daily_return).

- Backtest on out-of-sample splits and plot cumulative returns.

- Include realistic fees, bid-ask spreads, and slippage in each trade leg.

- Filter signals with models to reduce false entries and improve sizing.
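The example rule can be sketched as a small vectorized backtest. pair_backtest is a hypothetical helper; cost_per_leg = 0.1% is an illustrative assumption that bundles fees, spread, and slippage per leg per trade. It also assumes equal notional on both legs and ignores funding or borrow costs on the short.

```python
import pandas as pd


def pair_backtest(returns: pd.DataFrame, long_leg: str = "ETH-USD", short_leg: str = "BTC-USD",
                  move: float = 0.01, cost_per_leg: float = 0.001) -> pd.DataFrame:
    """Next-day long/short backtest of the 'short leg down, long leg up' rule."""
    r_long = returns[long_leg]
    r_short = returns[short_leg]
    # Signal on day t: short leg fell more than `move` while long leg rose more than `move`
    signal = ((r_short < -move) & (r_long > move)).astype(float)
    # Trade on day t+1 only, so the position never uses same-day information
    position = signal.shift(1).fillna(0.0)
    gross = position * (r_long - r_short)
    # Every change in position trades both legs; charge costs on each leg
    turnover = position.diff().abs().fillna(position.abs())
    net = gross - turnover * 2 * cost_per_leg
    equity = (1 + net).cumprod()
    return pd.DataFrame({"signal": signal, "position": position, "net": net, "equity": equity})
```

Usage: result = pair_backtest(returns), then plot result["equity"] on an out-of-sample split. A model filter would multiply signal by a 0/1 "predicted edge above threshold" series before the shift.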

Drawdown management and regime shifts

Protect capital with stop-loss limits, position caps, and volatility scaling. If correlations shift or gaps appear, disable rules until stability returns.

| Control | Purpose | Example |
|---|---|---|
| Stop-loss | Limit tail risk | 2–4% per pair |
| Max exposure | Manage concentration | 5% portfolio per pair |
| Model filter | Reduce noise | Only trade if predicted edge > threshold |
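Volatility scaling, the exposure cap, and a stop-loss can be sketched together. The helper names are hypothetical, and target_vol = 2% daily with a 30-day window is an illustrative assumption; max_weight = 5% and stop_loss = 3% mirror the table's examples.

```python
import pandas as pd


def vol_scaled_weight(pair_returns: pd.Series, target_vol: float = 0.02,
                      max_weight: float = 0.05, window: int = 30) -> pd.Series:
    """Daily position weight: full max_weight when recent volatility is at or below
    target_vol, scaled down proportionally when it is higher.

    Volatility is measured through day t-1 (shift(1)), so each weight is known before day t.
    """
    realized = pair_returns.rolling(window).std().shift(1)
    scale = (target_vol / realized).clip(upper=1.0)
    return (max_weight * scale).fillna(0.0)  # no position until the window is full


def stop_triggered(trade_returns: pd.Series, stop_loss: float = 0.03) -> pd.Series:
    """True from the first day the trade's cumulative loss reaches stop_loss onward."""
    cumulative = (1 + trade_returns).cumprod() - 1
    return (cumulative <= -stop_loss).astype(int).cummax().astype(bool)
```

Multiplying the backtest's position by these weights, and zeroing it once stop_triggered turns True, applies the controls without changing the signal logic.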

Final notes: stress-test strategies across historical shocks, apply shrinkage estimators (for example, Ledoit-Wolf) on rolling windows to stabilize correlation estimates, and maintain clear logs so trading remains auditable and adaptive to market regime changes.

Key takeaways and practical next steps for your analysis

Start with clean closing price panels to capture persistent co-movements across BTC, ETH, and other coins. That reliable base helps you build cross-asset features that improve price forecasting versus simple baselines.

Next steps: expand coverage to various cryptocurrencies, engineer lagged and rolling features, and compare forecasting models with time series splits and strict logging. Begin with regression and GBM baselines, then benchmark against neural networks and deep learning approaches.

Operational must-haves: stable pipelines for closing price ingestion, aligned timestamps, and cost-aware backtests with slippage and fees. Monitor correlations and alert on regime shifts that can erode performance in BTC/ETH pairs.

Deliverables: a reproducible notebook, saved models, experiment logs, and monthly model reviews. Keep documentation clear so your study is repeatable and auditable.
